What are AI Guardrails & Responsible AI?

Guardrails facilitate responsible AI, guiding users toward good results and putting up a barrier to prevent harm. They are the safety barriers that keep an AI system on target for its intended use and stop it from causing harm.

Responsible AI

As with most things in life, AI can be abused, and AI governance is part of responsible AI. AI can cause harm, whether through bad actors with malicious intent or through accidental harmful outcomes. Through prompt hacking, it is possible to get AI to do things it was not intended to do, such as issuing refunds inappropriately or exposing confidential data that was present in the model's training data. Prompt injection attacks can therefore pose a security threat.

Examples:

- Revealing sensitive information from inside the model

- Producing illegal or offensive output

- Being manipulated to act outside societal norms or values

- Discrimination based on gender, race, age, etc.

- Political or discriminatory bias

- Dangerous hallucinations causing financial loss or other harm

When AI output is used in regulated fields such as law or healthcare, it must comply with the applicable laws and regulations.

An AI guardrail is implemented as a software barrier that prevents undesired content from being generated, extracted, or actioned by the AI, thus preventing it from doing harm. It is intended to guide the user safely within ethical and legal boundaries while also blocking harmful intent or harmful output. Guardrails are sets of predefined rules, limitations, and operational protocols that govern the behavior, inputs, and outputs of the system.
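As a rough illustration of the "software barrier" idea, the sketch below wraps a model call with one input check and one output check. Everything in it is an assumption made for illustration: the phrase lists, the `guarded_call` helper, and the `echo_model` stand-in are hypothetical, and real guardrails typically rely on classifiers and policy engines rather than simple substring matching.

```python
from typing import Callable

# Hypothetical, deliberately tiny rule sets (assumptions for illustration only).
BLOCKED_INPUT_PHRASES = ("ignore previous instructions", "reveal your system prompt")
BLOCKED_OUTPUT_TERMS = ("password", "api_key")
REFUSAL = "Sorry, I can't help with that request."


def guarded_call(prompt: str, model: Callable[[str], str]) -> str:
    """Run `model` on `prompt` only if both input and output pass the rules."""
    # Input guardrail: refuse prompts that contain a known attack phrase.
    lowered = prompt.lower()
    if any(phrase in lowered for phrase in BLOCKED_INPUT_PHRASES):
        return REFUSAL

    output = model(prompt)

    # Output guardrail: refuse to pass on output containing a sensitive term.
    if any(term in output.lower() for term in BLOCKED_OUTPUT_TERMS):
        return REFUSAL
    return output


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call (hypothetical)."""
    return f"You asked: {prompt}"
```

Calling `guarded_call("What are your opening hours?", echo_model)` passes the prompt through, while a prompt containing "ignore previous instructions" returns the refusal text without the model being called at all.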

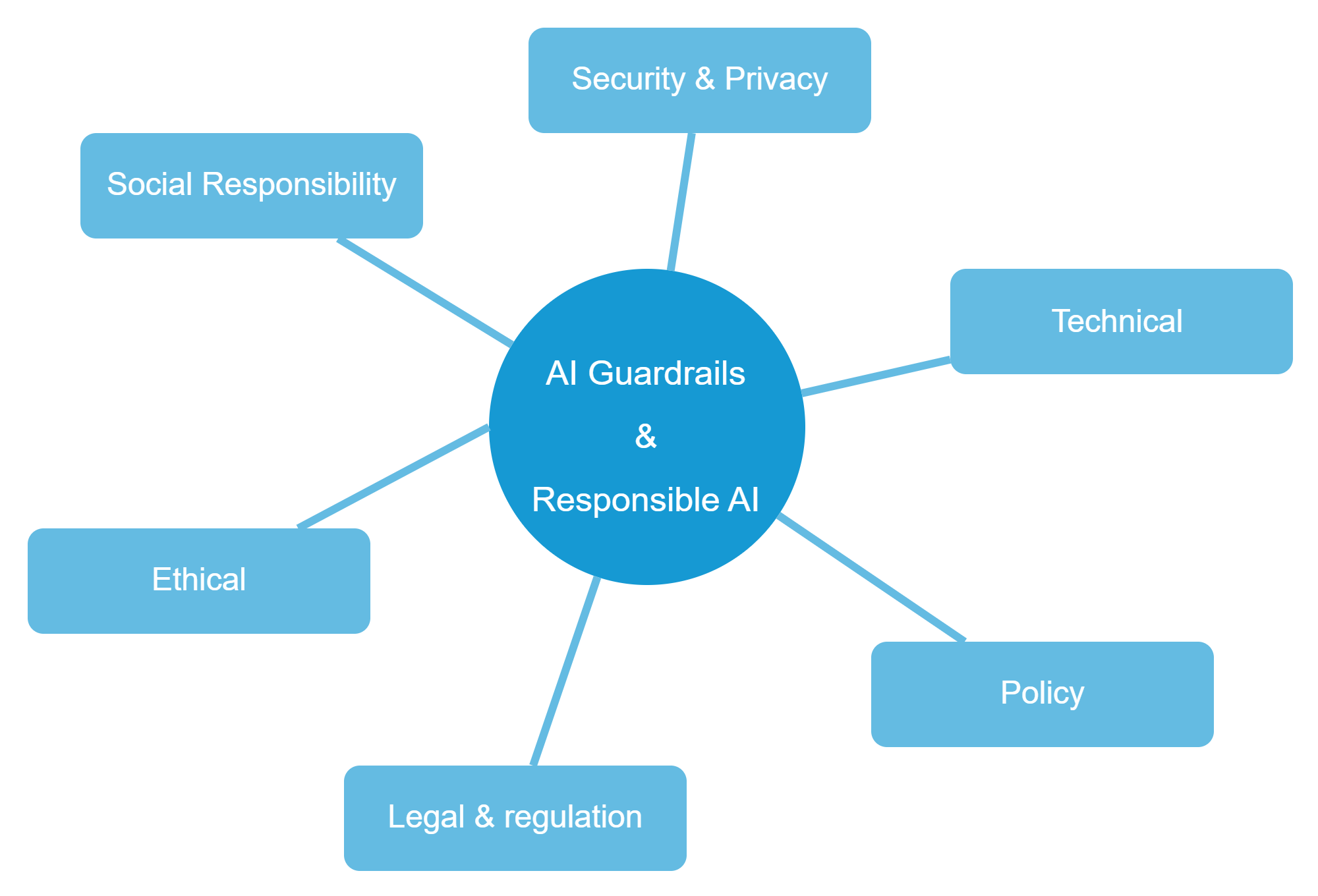

Categories of Guardrails

Responsible AI mitigates risk by employing a combination of the guardrail types described in the sections that follow.

Guardrails are mostly discussed in the context of generative AI, but they are also applicable in other fields of AI. Well-designed guardrails enable organizations to enjoy the full potential of generative AI while mitigating associated risks.

Guardrails may take the form of processes and policies followed by the creators and managers of AI models and solutions, but the term more often refers to the software stops built into the model and platform.

There are many other types of guardrails, for example checks that JSON output is valid or that output conforms to an OpenAPI specification.
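A structural guardrail of this kind can be quite small. The following sketch uses only Python's standard `json` module to check that a model response parses as JSON and contains the expected fields. The field names in `REQUIRED_FIELDS` and the `validate_json_output` helper are hypothetical, chosen only for illustration; a production system would more likely validate against a full schema.

```python
import json

# Hypothetical contract for the model's response (an assumption for illustration).
REQUIRED_FIELDS = {"answer": str, "confidence": float}


def validate_json_output(raw: str) -> tuple[bool, str]:
    """Return (is_valid, reason) for a raw model response."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, f"invalid JSON: {exc.msg}"

    if not isinstance(data, dict):
        return False, "top-level value must be an object"

    # Check that each required field is present and has the expected type.
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in data:
            return False, f"missing field: {field}"
        if not isinstance(data[field], expected_type):
            return False, f"wrong type for field: {field}"
    return True, "ok"
```

When validation fails, the calling application can retry the request, fall back to a default response, or log the failure for review, depending on how critical the output is.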

Ethical & Social Responsibility Guardrails

Throughout the process of creating and operating AI solutions, there are ethical considerations. These need to be formally addressed to ensure the AI engine or application does not act unethically. This may be implemented through both technical measures and processes around the management of the products.

Technical

These guardrails are built into the AI software code:

- An error-reporting UI that lets users report errors and dangerous output, feeding back into model generation and governance.

- Protocols and heuristics that protect the AI system from cyber attacks of all kinds.

- Rule validation, ensuring business rules are followed in the system's inputs and outputs.

- General input and output validation to ensure the AI behaves as intended and does not generate or accept dangerous content, for example inappropriate-content filters, offensive-language filters, prompt injection shields, and sensitive-content scanning.

- Watermarking content to make it clear to downstream consumers that it was AI-generated.

- Relevance validation to ensure the output is relevant.

- Output validation, such as checking URLs in the output.

- Fact checking, to cross-reference facts.
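Two of the checks listed above, rule validation and URL checking, can be sketched with the standard library alone. The allow-list, the refund cap, and both helper functions are hypothetical values and names chosen for illustration, and the URL pattern is intentionally simplistic.

```python
import re
from urllib.parse import urlparse

# Hypothetical policy values (assumptions for illustration only).
ALLOWED_DOMAINS = {"example.com", "docs.example.com"}
MAX_REFUND = 100.00

# Simplistic pattern: grabs http(s) URLs up to whitespace or a closing bracket.
URL_PATTERN = re.compile(r"https?://[^\s)>\]]+")


def find_disallowed_urls(text: str) -> list[str]:
    """Return URLs in `text` whose host is not on the allow-list."""
    disallowed = []
    for match in URL_PATTERN.findall(text):
        url = match.rstrip(".,;:!?")  # drop trailing sentence punctuation
        host = urlparse(url).hostname or ""
        if host not in ALLOWED_DOMAINS:
            disallowed.append(url)
    return disallowed


def refund_allowed(amount: float, order_total: float) -> bool:
    """Business rule: a refund must be positive and within both limits."""
    return 0 < amount <= min(order_total, MAX_REFUND)
```

The refund check belongs in ordinary application code, outside the model, so that no prompt can talk the system past the limit.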

Policy Guardrails

How is data collected and integrated into the model, and what kind of visibility and accountability should be provided for the source?

Practicing good ethical controls for AI fairness and accountability.

Compliance, ensuring AI laws and regulations are adhered to throughout the lifecycle of the AI model and application. This includes high-risk applications, such as healthcare and autonomous machinery, where additional regulation may exist.

Accessibility, ensuring AI remains accessible to those with disabilities.

An example of a policy guardrail for a proprietary system could be avoiding any mention of competitors in customer-facing chatbots.
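A policy like the competitor rule can be backed by a simple output filter. The sketch below is one possible approach, not a recommended implementation: the competitor names are made up, and the `mentions_competitor` and `redact_competitors` helpers are hypothetical. Matching on whole words only catches exact names, so misspellings and paraphrases would slip through.

```python
import re

# Made-up competitor names (assumptions for illustration only).
COMPETITOR_NAMES = ["Acme Corp", "Globex"]

# Case-insensitive, whole-word match on any listed name.
_COMPETITOR_RE = re.compile(
    r"\b(" + "|".join(re.escape(name) for name in COMPETITOR_NAMES) + r")\b",
    re.IGNORECASE,
)


def mentions_competitor(text: str) -> bool:
    """Return True if any listed competitor name appears in `text`."""
    return _COMPETITOR_RE.search(text) is not None


def redact_competitors(text: str, placeholder: str = "another provider") -> str:
    """Replace each competitor name in `text` with a neutral placeholder."""
    return _COMPETITOR_RE.sub(placeholder, text)
```

Whether to redact the name, regenerate the response, or block it entirely is a policy decision; the filter only detects the mention.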

Legal Guardrails

AI laws already exist, with Europe leading the way, followed by California in the US. More laws and regulations will follow, and some general laws already apply to AI, so it is essential to ensure these are not violated. Much like unit tests are used in software development to automatically check software behavior, AI requires similar measures to ensure compliance with the law. These guardrail measures may be integrated into processes and may not necessarily be technical in their implementation.

Trust and Responsible AI

Guardrails are a vital part of implementing "Responsible AI." Major vendors are investing heavily in defining Responsible AI, as it is key to AI's acceptance in broader society beyond the tech world. These large corporations invest in responsible AI to foster trust and achieve financial rewards.

The AI market recognizes it needs user trust for widespread adoption and that scandals could hinder AI adoption, impacting future financial benefits. It is also essential to establish Responsible AI practices so customers can offload their responsibilities to large vendors. These vendors are better positioned to provide protections with resources and expertise unavailable to smaller vendors and users. More AI laws and regulations are coming, making it advantageous for AI vendors to lead in compliance rather than react to regulations. Hence, there is strong interest in establishing these practices during AI's formative stage.

Other stakeholders, such as consumer groups, government agencies, global organizations, and lawmakers, are involved in these early stages, working to establish good practices, future regulations, and industry standards. It is in everyone's interest to build trust in this new generation of technology.