Understanding Large Language Models: The Core of Today's Generative AI Boom

Having a model in your head when working with generative AI and LLM platforms is a great help. This post paints an overview of AI models and introduces common terms every newcomer to AI platforms encounters.

At the core of the current generative AI boom are AI models—specifically, Large Language Models (LLMs). You may have heard about ChatGPT in mainstream media, but there are also models like Mistral, Claude, Gemini, Llama, and thousands more.

The AI solutions you create or use sit on top of AI models. It helps to understand what models are, because selecting a model that fits your use case is one of the first steps in building an AI application, whether you write code or use one of the many zero-code AI solutions such as the Zapia platform or Make.

I am an AI veteran and see that only now have we reached a point where "compute" power and accessibility have caught up with the science of machine learning. Today, machine learning and neural networks are commonly grouped under the general term "AI."

The Importance of Understanding LLMs

To effectively work with AI, it’s essential to have a conceptual understanding of what LLMs are and how they operate. You don’t need a degree in mathematics to grasp the basics—a high-level understanding can be incredibly helpful when navigating the AI landscape.

Neural Networks and LLMs

LLMs are built on neural networks, which you can imagine as a mathematical model made up of millions or billions of nodes connected by links. There’s an input layer of nodes and an output layer, with mathematical "signals" passed through these connections. Different types of neural networks are suited for tasks like classification, image recognition, sentiment analysis, and text completion. LLMs, specifically, use a type of neural network called the Transformer.
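To make the idea of signals passing between layers concrete, here is a minimal sketch of a forward pass through a tiny network. The layer sizes, weights and biases are hand-picked assumptions for illustration only (nothing here is trained), and the extra "hidden" layer between input and output is added to show how layers chain together.

```python
import math

def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """Compute one layer: each node sums its weighted inputs, adds a bias, applies sigmoid."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(sigmoid(total))
    return outputs

# Illustrative, untrained values: 2 input nodes -> 3 hidden nodes -> 1 output node.
HIDDEN_WEIGHTS = [[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]]
HIDDEN_BIASES = [0.0, 0.1, -0.1]
OUTPUT_WEIGHTS = [[0.7, -0.5, 0.2]]
OUTPUT_BIASES = [0.05]

def forward(inputs):
    """Pass the input signal through the hidden layer, then the output layer."""
    hidden = layer_forward(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer_forward(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)

prediction = forward([1.0, 0.0])
print(prediction)  # a single value between 0 and 1
```

Training a real network means adjusting those weights and biases, across millions or billions of connections, until the outputs become useful.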

Transformers

You may have seen the term "Transformers" on sites like Hugging Face, where it’s commonly mentioned in AI discussions. Transformer models are a type of neural network used in Natural Language Processing (NLP). They excel at understanding contextual data, where the meaning of words depends on their surrounding context, sentences, and even entire paragraphs. The Transformer architecture is adept at learning these patterns, making it ideal for language-based tasks.

Embeddings

In LLMs, text is split into tokens (words or pieces of words), and each token is mapped into a multi-dimensional space through a process called embedding. Embedding assigns each token a position in this space, placing it near other tokens with similar meanings. Imagine a 3D space, although real models use hundreds or thousands of dimensions: each word sits near related terms. The position is then refined by positional encoding, which records where the word appears in the sentence, so the model can use the surrounding context to tell apart words that are spelled the same but mean different things.
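As a rough illustration of "near other words with similar meanings", the sketch below compares toy vectors using cosine similarity, a common way to measure closeness in an embedding space. The three-dimensional vectors are invented for this example; real embeddings come from a trained model and have far more dimensions.

```python
import math

# Hypothetical 3-dimensional embeddings, hand-written for illustration.
EMBEDDINGS = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(word):
    """Return the other word whose vector points in the most similar direction."""
    others = [w for w in EMBEDDINGS if w != word]
    return max(others, key=lambda w: cosine_similarity(EMBEDDINGS[word], EMBEDDINGS[w]))

print(nearest("king"))  # queen
```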

Next, the relevance of each word is taken into account in relation to the other words. You can picture this as a relevance vector that becomes the joining lines in the mesh of words in the multi-dimensional space. Each of those vectors has a weight depending on how relevant the words are to one another. In a Transformer, this step is known as attention. The resulting weights determine the shape of the word matrix and are used when generating output.
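One way to picture these relevance weights is the attention calculation used in Transformers. The sketch below is a simplification under stated assumptions: the token vectors are invented, and each vector is used directly as both "query" and "key", whereas a real Transformer first passes them through learned projections.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Score the query against every key (scaled dot product), then normalise."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

# Hypothetical 2-dimensional token vectors for the phrase "the river bank".
TOKENS = ["the", "river", "bank"]
VECTORS = [[0.1, 0.1], [0.9, 0.2], [0.8, 0.3]]

# How relevant is each token to "bank"?
weights = attention_weights(VECTORS[2], VECTORS)
for token, weight in zip(TOKENS, weights):
    print(f"{token}: {weight:.2f}")
```

With these toy numbers, "river" receives the largest weight for "bank", which is the kind of signal that lets a model lean towards the riverside meaning rather than the financial one.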

In another post we will talk about giving models additional training by adjusting the positions of the nodes and links in the matrix, an operation known as fine-tuning. This is only possible with models that have open weights, that is, weights that are available for the end user to adjust. Some models have closed weights and cannot be altered. RAG, discussed next, can help change the output of those locked-down models.

Open weights should not be confused with open-source models. An open-source AI model is made publicly available for anyone to access, use, and modify. Unlike proprietary models, open-source AI models share their underlying code, training data, and architecture, allowing developers and researchers to study how they work, customize them for specific applications, and contribute improvements.

This transparency fosters innovation and collaboration across the AI community, helping accelerate advancements in AI technology. Many open-source models, such as those found on platforms like Hugging Face, can be freely used, adapted, and integrated into various projects, making AI more accessible and flexible for a broad range of users and applications.

Embeddings are crucial in LLMs, especially for techniques like Retrieval-Augmented Generation (RAG), which use vector databases to improve output relevance. There are various embedding algorithms, including:

- Word2Vec by Google

- GloVe (Global Vectors for Word Representation)

- BERT (Bidirectional Encoder Representations from Transformers)

- ELMo (Embeddings from Language Models)

These techniques also apply to graphs and images. AI platforms often offer different embedding options to choose from.
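To show how embeddings feed into RAG, here is a minimal sketch of the retrieval step. It makes some loud assumptions: `embed` is a stand-in that simply counts words (a real system would call a trained embedding model), the in-memory `INDEX` list plays the role of a vector database, and the documents and prompt wording are invented.

```python
import math
from collections import Counter

def embed(text):
    """Stand-in embedding: a bag-of-words count vector (not a trained model)."""
    return Counter(text.lower().split())

def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Hypothetical knowledge base; a vector database would store these vectors at scale.
DOCUMENTS = [
    "refunds are processed within five working days",
    "our office is closed on public holidays",
    "shipping to europe takes one week",
]
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]

def retrieve(question, top_k=1):
    """Return the top_k documents whose vectors are closest to the question."""
    q = embed(question)
    ranked = sorted(INDEX, key=lambda item: cosine_similarity(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:top_k]]

def build_prompt(question):
    """Augment the question with retrieved context before sending it to an LLM."""
    context = "\n".join(retrieve(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

print(build_prompt("how long do refunds take"))
```

The model itself is never changed here. Only the prompt is enriched, which is why RAG also works with closed-weight models.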

Neural Networks in Practice

Explaining the full operation of neural networks is complex, but this overview provides enough insight for a basic understanding in applied AI. For those interested in diving deeper, there are excellent resources available.

Running Models Locally

Some models can be downloaded and run on local machines. Ollama is a good place to start experimenting. Note, however, that AI models require significant computational power. While a small model may run on a standard CPU, performance improves greatly with a Graphics Processing Unit (GPU). For industrial applications, specialized chipsets like Neural Processing Units (NPUs) are optimized for neural network workloads. Soon, we may see NPUs in laptops alongside CPUs and GPUs.
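If you have Ollama running locally, a script can talk to it over its local HTTP API. The sketch below assumes Ollama is listening on its default address and that a model has already been pulled. The model name `llama3` is only a placeholder for whichever model you have installed.

```python
import json
import urllib.request

# Assumed default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_request(prompt, model="llama3"):
    """Build the HTTP request; 'llama3' is a placeholder model name."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(prompt, model="llama3"):
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as response:
        return json.loads(response.read())["response"]

# Usage (needs a running Ollama server and a pulled model):
# print(ask("Explain embeddings in one sentence."))
```

Cloud AI services follow the same request-and-response pattern, usually with an API key added to the request headers.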

Dedicated hardware can be costly, so many people opt for cloud services, which offer access to powerful AI models via APIs.

Model Size

The size of an AI model, often measured in parameters (like 1 billion or 175 billion parameters), represents the complexity and capacity of the neural network within the model. These parameters are weights in the model that determine how it processes information. Weights are the glue that holds the model together in the shape it inhabits.

Larger models, with more parameters, can capture more nuanced details and relationships within data, enabling them to perform complex tasks such as generating coherent and contextually accurate language or understanding subtle emotional cues in text. For instance, large models like OpenAI's GPT-3, with 175 billion parameters, can provide highly sophisticated responses across a wide range of topics.

The trade-off, however, lies in their demand for computing power and memory, making them costly to train, run, and maintain.
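A quick back-of-the-envelope calculation shows why. The sketch below estimates the memory needed just to hold a model's weights, assuming each parameter is stored at a given numeric precision. It ignores everything else a running model needs, so treat the results as a lower bound.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAMETER = {"float32": 4, "float16": 2, "int8": 1}

def weights_memory_gb(parameter_count, precision="float16"):
    """Memory needed to hold the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return parameter_count * BYTES_PER_PARAMETER[precision] / 1e9

for name, params in [("1B model", 1_000_000_000), ("175B model", 175_000_000_000)]:
    print(name, weights_memory_gb(params), "GB at float16")
# 1B model 2.0 GB at float16
# 175B model 350.0 GB at float16
```

A 1-billion-parameter model fits comfortably on a laptop, while a 175-billion-parameter model needs several high-end GPUs before it can answer a single question.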

Smaller models are faster and more energy-efficient, making them suitable for less demanding tasks or resource-constrained environments (like mobile devices). However, these smaller models can lack the depth or accuracy of larger models, particularly in complex applications that require a nuanced understanding of context or high-level reasoning.

There is another post where I explore model size in a little more detail.

Ensemble Models

We talked above about model sizes. Big models are overkill for many simple business AI applications where the scope of the task is constrained. There is an analogy in normal life: a business employs specialists for specific tasks because a specialist will do the task better than a generalist. It is important to talk about ensemble models, as it is likely that AI solutions will use them more in the future.

Ensemble models combine multiple models to improve overall performance, accuracy, robustness and efficiency.

Rather than relying on a single model, ensemble methods leverage the strengths of various models, each of which may specialize in different aspects of a task or provide unique insights. The idea is that while individual models might make mistakes or have certain biases, combining them allows those weaknesses to be minimized, leading to better generalization on new data. Depending on the number of models, delegating subtasks to smaller, cheaper models can also reduce operating costs.

Where cost is not the concern, an ensemble might combine a few smaller models trained on different data subsets or models that use different algorithms altogether (such as decision trees, neural networks, or support vector machines).

Ensemble methods include:

- Bagging (where multiple versions of a model are trained on bootstrapped data samples)

- Boosting (where models are sequentially trained to focus on previous models' mistakes)

- Stacking (where models’ outputs are combined by a “meta-learner”)
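Bagging, the first method in the list, can be sketched in a few lines. This is a deliberately trivial illustration: each "model" just predicts the mean of its own bootstrapped sample, and the observations are made up. In practice the base models would be decision trees or similar learners.

```python
import random
from statistics import mean

def bootstrap_sample(data, rng):
    """Draw len(data) items from data with replacement."""
    return [rng.choice(data) for _ in data]

def train_mean_model(sample):
    """A trivial stand-in 'model': it always predicts the mean of its training sample."""
    value = mean(sample)
    return lambda: value

def bagged_prediction(data, n_models=25, seed=0):
    """Train n_models on different bootstrap samples and average their predictions."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    models = [train_mean_model(bootstrap_sample(data, rng)) for _ in range(n_models)]
    return mean(model() for model in models)

# Hypothetical observations containing one outlier (40.0).
OBSERVATIONS = [12.0, 15.0, 11.0, 14.0, 40.0, 13.0]
print(bagged_prediction(OBSERVATIONS))
```

Because every model sees a slightly different sample, no single odd data point dominates all of them, and averaging smooths out their individual quirks.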

Ensembles are good where high accuracy is crucial and errors are costly—such as finance for risk assessment, healthcare for diagnostic predictions, or autonomous driving for decision-making under varying conditions.

Voting is a method used to combine the outputs of multiple models to make a final prediction. Voting is especially common in classification tasks, where each model in the ensemble independently predicts a class label, and the ensemble then decides on the most likely class by "voting" among these predictions.
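A minimal sketch of majority voting might look like the following. The three "models" are hypothetical hand-written rules standing in for trained classifiers, used here only so the example runs on its own.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the label predicted most often; ties go to the label seen first."""
    return Counter(predictions).most_common(1)[0][0]

# Hypothetical stand-ins for three independently trained classifiers.
def keyword_model(text):
    return "spam" if "free" in text.lower() else "ham"

def length_model(text):
    return "spam" if len(text) > 40 else "ham"

def punctuation_model(text):
    return "spam" if text.count("!") >= 2 else "ham"

MODELS = [keyword_model, length_model, punctuation_model]

def ensemble_predict(text):
    """Ask every model for a label, then let the majority decide."""
    return majority_vote([model(text) for model in MODELS])

print(ensemble_predict("Free prize!! Claim now"))  # spam (two of three models agree)
print(ensemble_predict("See you at lunch"))        # ham
```

In the first message the length-based rule votes "ham", but it is outvoted by the other two, which is the point of the approach: one model's mistake does not decide the outcome.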

The drawback is that ensemble models can be computationally heavy and harder to interpret, with extra complexity as they often involve coordinating multiple models simultaneously. However, the significant boost in reliability and performance makes ensembles a go-to approach for complex tasks where individual models may fall short.

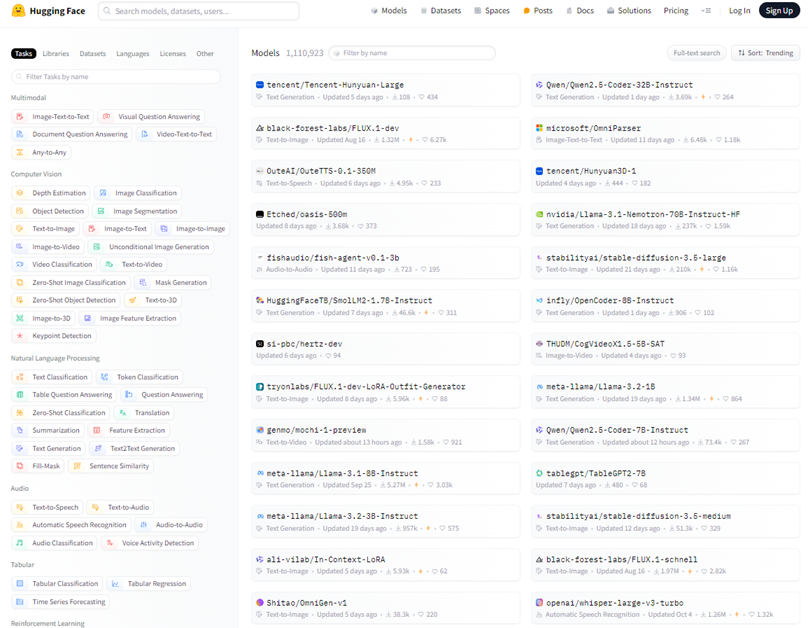

Popular Foundation Models

Foundation models are generalist models that have been trained on a wide variety of data, making them good all-round models that can be used in a wide variety of applications. Most of what you hear about AI models in mainstream channels refers to foundation models. Models take a lot of resources to train, in terms of time, energy and data. The big tech companies train these foundation models, which can then be fine-tuned for specific applications or used as they are.

Here are some of the bigger-name foundation models and platforms you can explore. AI is expanding and moving rapidly. This list was built in 2024, and new players enter the game every few months:

ChatGPT: Developed by OpenAI. Link: ChatGPT

Mistral AI: Developed by Mistral AI. Link: Mistral AI

Claude AI: Developed by Anthropic. Link: Claude AI

Gemini: Developed by Google DeepMind. Link: Gemini

Llama: Developed by Meta AI. Link: Meta AI

Hugging Face Models: Hosted by Hugging Face, a hub for models from many different developers. Link: Hugging Face Models

Grok: Developed by xAI. Link: Grok

StableLM: Developed by Stability AI. Link: StableLM

Phi-3: Developed by Microsoft. Link: Phi-3

Nemotron-4: Developed by NVIDIA. Link: Nemotron-4

I hope this basic overview has helped introduce some of the concepts and terminology that you will encounter when getting started with AI. It is part of a series of posts on AI concepts.