Understanding Context Windows in LLMs

Ever wondered why prompt size is limited when working with an AI model, or why the model seems to forget what it was told earlier in the conversation? The answer lies in the context window, which acts as the AI's working memory.

This explanation should help you visualise and conceptualise the idea. Once you grasp it, it’s a great starting point for deeper exploration.

What Are Context Windows?

When I first started with AI, context windows were much smaller, and my prompts often led to some very strange results. Looking back, I realise it was because I had no understanding of what context was or how it worked. I now see that grasping this concept is fundamental to success. Let me explain...

When prompting AI models, the conversation between the user and the model needs to be persisted; otherwise each successive prompt would have no awareness of what came before it in the exchange. To allow follow-on prompts that build on previous prompts and responses, such as "summarise that answer", the history of the user-AI conversation needs to be retained for the session.

The "memory" that maintains this history is named the context window and can be thought of as the equivalent of working memory in humans.
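To make the idea of retained history concrete, here is a minimal sketch of a chat session that stores every prompt and response and sends the whole list with each new prompt. The `ChatSession` class and the `role`/`content` message shape are my own illustration (the shape mirrors a convention used by many chat APIs), not any particular vendor's SDK.

```python
from dataclasses import dataclass, field


@dataclass
class ChatSession:
    """Keeps the running conversation so it can be resent with every prompt."""
    messages: list[dict] = field(default_factory=list)

    def build_request(self, user_prompt: str) -> list[dict]:
        # Append the new prompt to the stored history; the whole list is
        # what would be sent to the model, not just the latest prompt.
        self.messages.append({"role": "user", "content": user_prompt})
        return list(self.messages)

    def record_response(self, text: str) -> None:
        # Store the reply so the next prompt can refer back to it.
        self.messages.append({"role": "assistant", "content": text})


session = ChatSession()
session.build_request("Explain context windows.")
session.record_response("A context window is the model's working memory.")
request = session.build_request("Summarise that answer.")
print(len(request))  # 3
```

The follow-on prompt "Summarise that answer." only makes sense because the two earlier messages travel with it.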

Picture a window frame sat over the timeline of the back-and-forth interaction with the AI, hence the name context window. The user prompts and the AI responses flow past the window frame and out the other side. At any point in time, the AI engine only has access to what it can see through that window frame, which is a fragment of the overall conversation. Maybe a visualisation would help...

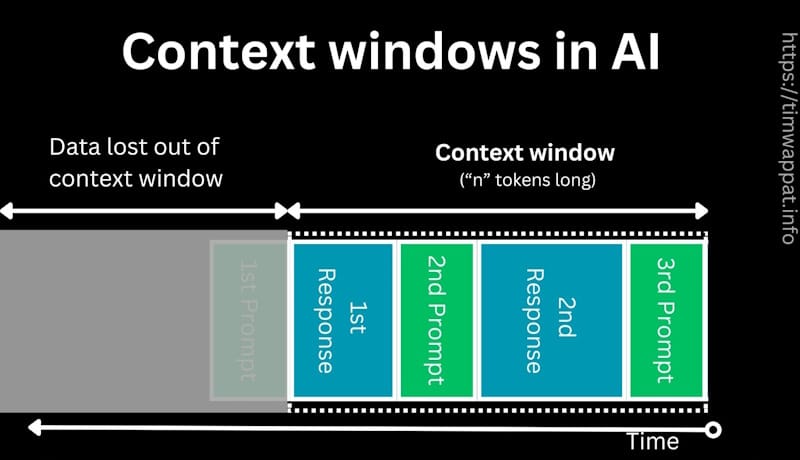

In the drawing, you can see the context window (memory buffer) on the right and the space outside it on the left. User prompts and AI responses are arranged along the timeline in the diagram, one after another. This simplified layout helps with visualisation and understanding the basic concept.

As each prompt and response is added to the window, the space gradually fills up until the context window is full. In the diagram, you can see that the first prompt has fallen out of the context window.

Once the first prompt is outside the context window, the AI can no longer "remember" its content for future responses. Occasionally, some information from the first prompt may still appear in responses if it was included in subsequent prompts or answers within the window. However, the influence of the first prompt will be greatly diminished.
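The rolling behaviour described above can be sketched in a few lines. This is a simplified illustration, assuming a crude word-count stand-in for real tokenisation; the function names `count_tokens` and `fit_to_window` are made up for the example, and real chat systems may trim or summarise history differently.

```python
def count_tokens(text: str) -> int:
    # Crude stand-in: one token per whitespace-separated word.
    return len(text.split())


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the newest messages that fit; older ones fall out of the window."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # this message and everything older is out of view
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "Prompt 1: my name is Ada",
    "Response 1: nice to meet you Ada",
    "Prompt 2: what is a token",
    "Response 2: a token is a piece of text",
]
visible = fit_to_window(history, max_tokens=22)
print(visible[0])  # Response 1: nice to meet you Ada
```

With a 22 token window the first prompt no longer fits, so it is the first thing the model "forgets".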

Context window size

The size of the context window defines how much information, measured in tokens, the AI can "see" at any given moment. It contains not just the text prompts created by the user; it also holds:

- AI responses from previous prompts in the session/chat

- Any uploaded documents

- Previous user prompts

- System prompts

- Formatting information

- RAG content

- Image data in responses

Without a context window, each prompt would be processed in isolation, with no connection to what came before. In Transformer models (used for text generation and in many multimodal, image-capable systems), the context window defines how much of the conversation, image, document, or task the model can "see" and consider when generating its next response. This is made possible by the self-attention mechanism, which lets the model weigh every token in the window against every other token when producing its output.

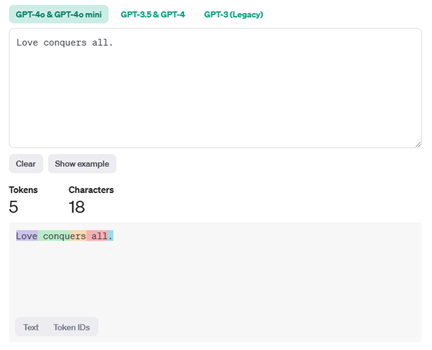

Context window sizes are typically measured in tokens. A token can range from a single character to an entire word, depending on the language and the model's tokeniser. For example, the phrase "Love conquers all." might break down into five tokens: "Love", "conqu", "ers", "all", and ".". This example illustrates how quickly tokens add up, even for relatively short text input and output.
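To show how a word can end up split across tokens, here is a toy tokeniser. It is only a sketch: the vocabulary is invented for this one phrase, and the greedy longest-match rule is a simplification of what real tokenisers (which learn their vocabularies from large amounts of text) actually do.

```python
# Hypothetical vocabulary, invented for this example only.
VOCAB = {"Love", " conqu", "ers", " all", "."}


def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match split; unknown characters become single tokens."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until a vocab entry matches.
        for end in range(len(text), i, -1):
            if text[i:end] in vocab:
                tokens.append(text[i:end])
                i = end
                break
        else:
            tokens.append(text[i])  # no match: fall back to a single character
            i += 1
    return tokens


tokens = tokenize("Love conquers all.", VOCAB)
print(tokens)       # ['Love', ' conqu', 'ers', ' all', '.']
print(len(tokens))  # 5
```

Three words and a full stop become five tokens, because "conquers" is not in the vocabulary as a whole word.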

It’s also important to note that uploaded or generated images in responses represent a significant number of tokens, far exceeding typical text inputs.

You can see how tokenising works with OpenAI's Tokenizer utility, which is pretty cool to play with. Hugging Face also hosts the Tokenizer Playground, which shows how different models tokenise text input.

Documents, code samples, images and text all take up room in the context window.

The impact of Retrieval-Augmented Generation (RAG)

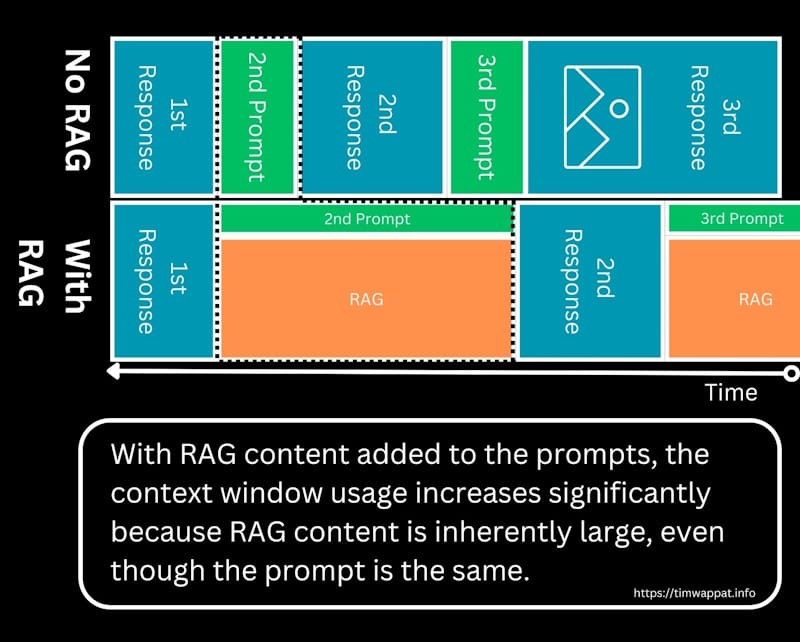

I introduced RAG in a previous post. RAG allows an AI model to combine its trained knowledge with extra information inserted into the prompt (transparently to the user). This information is often sourced from a vector database, but it can also come from live web queries to the open Internet.

The context window size becomes important here because:

- It allows the model to “read” retrieved documents alongside your input.

- A larger context window means the AI can pull in more relevant data and consider it all together, leading to better and more accurate responses.

However, RAG can fill the context window very quickly. It is not unusual to find RAG content occupying as much as 1,000 times the token space of the base user prompt, due to the number of external resources pulled into the context to support the AI response.

In the RAG context window illustration, RAG's extra content takes up significant space while the prompt size remains unchanged. This extra content puts pressure on the available space, reducing room for prompts and responses unless the context window is expanded. The wider adoption of RAG is one of the reasons bigger context windows are in demand.
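The space pressure from RAG can be sketched as a simple packing problem: retrieved chunks are added until the token budget runs out. This is an illustrative sketch only; the window size, the reserved answer space, the sample chunks and the word-count tokeniser are all assumptions made up for the example.

```python
def count_tokens(text: str) -> int:
    # Crude stand-in: one token per whitespace-separated word.
    return len(text.split())


def pack_chunks(chunks: list[str], budget: int) -> list[str]:
    """Add retrieved chunks in ranked order, skipping any that no longer fit."""
    packed, used = [], 0
    for chunk in chunks:  # assumed to be sorted most relevant first
        cost = count_tokens(chunk)
        if used + cost > budget:
            continue  # this chunk would overflow the budget
        packed.append(chunk)
        used += cost
    return packed


user_prompt = "What is our refund policy?"
retrieved = [
    "Refunds are available within 30 days of purchase.",
    "Shipping costs are not refunded unless the item is faulty.",
    "Gift cards cannot be refunded.",
]
window, reserved_for_answer = 30, 10
budget = window - reserved_for_answer - count_tokens(user_prompt)
context = pack_chunks(retrieved, budget)
print(budget, len(context))  # 15 2
```

Even in this tiny example the retrieved content takes more room than the question itself, and one chunk has to be left out.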

Demonstration of rolling context window in operation

The following video I made shows how the rolling context fills as prompts and responses are fed into it, eventually pushing the first prompts out of the context so that they become unavailable to later prompts.

It also highlights how images take up many more tokens than text responses. When generating images, it does not take long for the context to fill and start losing the earlier prompts and responses. This explains why images can suddenly change after successive generations: the earlier prompts have dropped out of the AI context. Large source code outputs can have this effect too, to a lesser extent.

Finally, the video illustrates how RAG (Retrieval-Augmented Generation) can consume a disproportionately large portion of the context window compared to standard user text prompts.

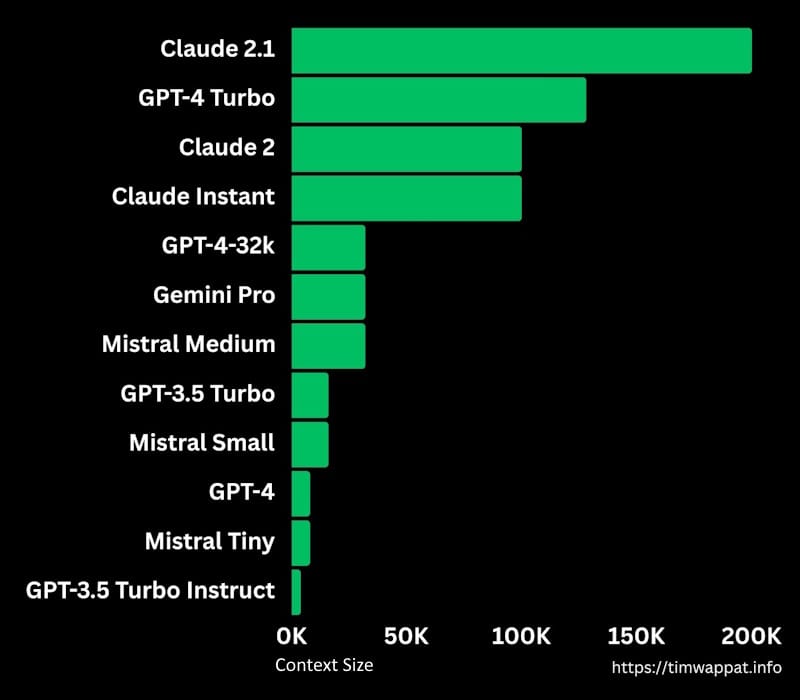

Why are context windows getting bigger?

A number of reasons exist for larger and larger context windows:

- More complex use cases: People want AI to summarise entire books, analyse lengthy legal documents, or conduct detailed brainstorming sessions. Larger windows support this by keeping more data in context at once.

- RAG is more popular: RAG is being used more and more and is driving demand for larger context to allow stuffing the RAG data into the prompt.

- Competitive edge: AI model producers are in a race to provide the most flexible and capable models, and larger context windows are a strong selling point.

Is bigger better?

Older generations of AI implementations had very small context windows, which meant they could not retain much of the conversation. It also limited how much could be pasted into the prompt, so only short chunks of text could be submitted at a time.

There is a trend at the moment towards bigger context windows, made possible by big improvements in inference speed. The bigger size allows the contents of whole books or big sections of software code to be ingested into the AI prompt context. This makes prompting easier than having to break the material up into small chunks, especially when the whole work needs to be considered in one go.

Imagine asking an AI for a summary of a large book. A big context lets it reference many pages at once rather than breaking the task into smaller, potentially disjointed chunks considered in isolation. It is easy to see how knowing the flow of the whole book, rather than just standalone chapters, could drastically change the results. This is one of the big benefits of increased size.

An issue with small context windows is that after a few interactions the first prompts and responses become unavailable, falling out of the context window as memory is exhausted. This means having to re-prompt the earlier information to get it back into context. This is seen really quickly when generating multiple images in succession, where with smaller context models, it does not take long for the first prompts to fall out of context. The images then no longer follow those early prompts, all of a sudden changing as the early prompt instructions are forgotten.

Large Context

Advantages:

- Enable handling of longer documents or conversations in a single go.

- Coherent model responses.

- Allow more nuanced understanding by looking at broader context.

- Fewer hallucinations.

Disadvantages:

- More data to process so increased costs to serve.

- More data with less focus may create "noise" that confuses the output.

You would naturally think the answer is to go large, but there are downsides to large context windows too. As AI evolves, context windows are getting bigger: many current models support hundreds of thousands of tokens, and a few support a million or more. There is pressure for ever larger windows, but they come at a cost. The compute required by self-attention scales quadratically with context length, so doubling the number of tokens takes roughly four times the processing. Inference is also challenging: as the output grows, the computation needed to generate it increases dramatically. So large contexts bring large challenges in servicing the compute. Luckily, there have been some recent big leaps forward in inference speed and general efficiency that enable larger contexts to be developed and deployed.
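A quick bit of arithmetic shows what quadratic scaling means in practice. The sketch below simply counts token-to-token comparisons for one full self-attention pass; it ignores everything else a model does and any of the optimisations real systems use, so treat the numbers as an illustration of the growth rate rather than a cost estimate.

```python
def attention_pairs(context_tokens: int) -> int:
    """Token-to-token comparisons in one full self-attention pass."""
    return context_tokens * context_tokens


for size in (4_096, 8_192, 16_384):
    print(size, attention_pairs(size))

# Doubling the context length quadruples the pairwise work.
ratio = attention_pairs(8_192) / attention_pairs(4_096)
print(ratio)  # 4.0
```

Each doubling of the context multiplies the pairwise work by four, which is why long contexts are so demanding to serve.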

Lost in the Middle

As context sizes have grown, a phenomenon known as the "lost in the middle" problem has plagued large contexts. When the context is large, content in the middle tends to be ignored by the AI engine in favour of content at the start and the end. The accuracy of results can drop drastically if the information needed to satisfy the prompt is nestled in the middle of the context window rather than at the start or finish. Thankfully, some mitigations against this effect have been found, such as prompt compression, so this is no longer such a big hindrance.
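Since the start and end of the context get the most attention, one simple idea is to arrange retrieved items so the most relevant sit at the edges and the least relevant end up in the middle. Note this is a different technique from the prompt compression mentioned above, and whether it helps with any given model is an assumption you would need to test. The function name `reorder_for_edges` is made up for this sketch.

```python
def reorder_for_edges(ranked: list[str]) -> list[str]:
    """Put the most relevant items at the start and end, the least in the middle."""
    front, back = [], []
    for index, item in enumerate(ranked):  # ranked most relevant first
        if index % 2 == 0:
            front.append(item)
        else:
            back.append(item)
    return front + back[::-1]


docs = ["A", "B", "C", "D", "E"]  # "A" is the most relevant, "E" the least
print(reorder_for_edges(docs))  # ['A', 'C', 'E', 'D', 'B']
```

The two strongest items, "A" and "B", land at the start and end, while the weakest, "E", sits in the middle where it matters least.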

This model has a 4096 token context size. What does that mean?

Put simply, your whole world must fit in this size. That is, you must do everything within the limit of 4096 tokens. You must ask the question, pass any history of past questions and answers, include RAG and other context content, and still have space left over for the next response completion, all within this 4096 token limit.
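Here is that budget worked through as simple arithmetic. Only the 4096 limit comes from the example above; the individual token counts are hypothetical numbers chosen for illustration.

```python
CONTEXT_LIMIT = 4096  # the model's context size in tokens

# Hypothetical token counts for one request.
usage = {
    "system_prompt": 300,
    "chat_history": 1800,
    "rag_content": 1200,
    "new_question": 96,
}

used = sum(usage.values())
left_for_response = CONTEXT_LIMIT - used
print(used, left_for_response)  # 3396 700
```

In this made-up case only 700 tokens remain for the answer, so a long response would be cut short unless some history or RAG content is dropped.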

Use "new chat" often to reduce context, increase efficiency and save on token usage

The user interfaces for ChatGPT, Claude, and similar models include a "New Chat" button. Starting a new chat essentially creates a fresh session with an empty context. Using the "New Chat" function is important for efficient use of your token allowance and to keep text completions running smoothly.

When chatting with these models, it’s easy to quickly exhaust the limited token allowance provided under your payment plan. Being aware of the context can help extend the length of your usable session.

Make it a habit to use the "New Chat" function whenever your next prompts don’t need the past context. By starting a new chat, the previous context isn’t passed back into the AI model with each prompt, reducing the number of tokens processed. This simple step can significantly lower token usage and lead to efficient model use.
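A rough calculation shows why this helps. The sketch below assumes every turn adds the same number of tokens and that the full history is sent again with each prompt; both are simplifications, and the figures are made up for illustration.

```python
def total_input_tokens(turns: int, tokens_per_turn: int) -> int:
    """Tokens sent across a chat when every turn resends all earlier turns."""
    # Turn k carries k turns' worth of tokens: 1 + 2 + ... + turns.
    return tokens_per_turn * turns * (turns + 1) // 2


one_long_chat = total_input_tokens(turns=20, tokens_per_turn=500)
two_short_chats = 2 * total_input_tokens(turns=10, tokens_per_turn=500)
print(one_long_chat, two_short_chats)  # 105000 55000
```

Under these assumptions, splitting twenty turns into two fresh chats of ten roughly halves the tokens processed.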

If the AI starts to struggle or give incomplete responses, it can be helpful to ask it to summarise the conversation so far. Then, hit the "New Chat" button and use the summary to seed the new session. This approach can make your interactions more efficient while keeping your token usage under control.
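The summarise-then-restart approach can be sketched as follows. The `start_fresh_chat` helper and the `fake_summarise` placeholder are hypothetical; in practice the summary would come from asking the model itself, which is not shown here.

```python
from typing import Callable


def start_fresh_chat(history: list[str], summarise: Callable[[str], str]) -> list[str]:
    """Return a new history seeded with a summary of the old one."""
    summary = summarise("\n".join(history))
    return [f"Summary of our earlier conversation: {summary}"]


def fake_summarise(text: str) -> str:
    # Placeholder only: a real version would ask the model for a summary.
    return text.splitlines()[0]


old_chat = [
    "We are planning a blog post on context windows.",
    "Draft 1 ...",
    "Draft 2 ...",
]
new_chat = start_fresh_chat(old_chat, fake_summarise)
print(len(new_chat))  # 1
```

The new session starts with a single short message instead of the whole back-and-forth, which leaves far more of the window free.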

Summary

So the main takeaway is that when prompting, there is a limited working memory. This can be consumed quickly by images and RAG. Some models offer huge contexts; others are very small. Be aware that during long AI interactions, context may drop out of the window and you may need to re-prompt the AI with your original prompt data to keep it in context.

Future posts will build on this knowledge when we look at interacting with models via the Semantic Kernel SDK, where we need to handle chat history explicitly.