SSMS SQL Hazard

Let me point out a hazard of using SSMS when developing a SQL DELETE query, especially a more complex one that takes time to build up. I also look at some of the ways I work and how they minimise the chance of damaging data.

Let us say we are developing a simple query to remove orphan records from the prices table of Dynamics GP:

    DELETE IV00107
    SELECT *
    FROM IV00107
    LEFT JOIN IV00108
        ON IV00107.ITEMNMBR=IV00108.ITEMNMBR
        AND IV00107.CURNCYID=IV00108.CURNCYID
        AND IV00107.PRCLEVEL=IV00108.PRCLEVEL
        AND IV00107.UOFM=IV00108.UOFM
    WHERE
        IV00107.PRCLEVEL='LIST'
        AND IV00108.ITEMNMBR IS NULL

…as we develop it, I highlight from SELECT down to NULL and hit F5. SSMS executes only the highlighted text, so that runs just the SELECT and shows which rows the DELETE will remove later. When I am ready, I put a double dash in front of the SELECT and run the whole statement, so that the DELETE executes against the same FROM, JOIN and WHERE clauses.
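For clarity, this is roughly what the statement looks like at that final step, using the same tables and predicates as the query above. With the SELECT commented out, the DELETE picks up the FROM, JOIN and WHERE clauses that follow it, so only the orphan rows are targeted. Treat it as a sketch of the pattern rather than something to paste into production:

```sql
-- Final form: the SELECT is commented out, so DELETE, FROM, JOIN and WHERE
-- are parsed as one statement that removes only the orphan rows.
DELETE IV00107
--SELECT *
FROM IV00107
LEFT JOIN IV00108
    ON IV00107.ITEMNMBR = IV00108.ITEMNMBR
    AND IV00107.CURNCYID = IV00108.CURNCYID
    AND IV00107.PRCLEVEL = IV00108.PRCLEVEL
    AND IV00107.UOFM = IV00108.UOFM
WHERE
    IV00107.PRCLEVEL = 'LIST'
    AND IV00108.ITEMNMBR IS NULL
```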

Let us say we take a break, then come back having got approval to delete the records. Our eye catches the DELETE, so we highlight from DELETE to NULL and hit F5. Oh no! We have just deleted everything in IV00107! Think about it: the SELECT is not commented out, so DELETE IV00107 is a complete statement on its own, with no WHERE clause, and the DELETE and SELECT are interpreted (correctly) as two different operations.
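To make the two operations explicit, here is the same highlighted text with statement terminators added where SQL Server effectively splits it. The semicolons and comments are mine, for illustration only. This is the destructive form, shown to explain the behaviour and not to be run against data you care about:

```sql
-- Statement 1: no FROM, JOIN or WHERE applies, so every row in IV00107 is deleted.
DELETE IV00107;

-- Statement 2: a separate SELECT, which now runs against the emptied table.
SELECT *
FROM IV00107
LEFT JOIN IV00108
    ON IV00107.ITEMNMBR = IV00108.ITEMNMBR
    AND IV00107.CURNCYID = IV00108.CURNCYID
    AND IV00107.PRCLEVEL = IV00108.PRCLEVEL
    AND IV00107.UOFM = IV00108.UOFM
WHERE
    IV00107.PRCLEVEL = 'LIST'
    AND IV00108.ITEMNMBR IS NULL;
```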

Developing the record set to remove as a SELECT, then adding the DELETE to the top ready to be run, is a common pattern for me. However, I usually put a double dash in front of the DELETE, as shown below, to prevent this mistake, or the mistake of just hitting F5 during testing without remembering to highlight the SELECT first. The action of uncommenting the DELETE triggers my mind to then comment out the SELECT as well.

    --DELETE IV00107
    SELECT *
    FROM IV00107
    LEFT JOIN IV00108
        ON IV00107.ITEMNMBR=IV00108.ITEMNMBR
        AND IV00107.CURNCYID=IV00108.CURNCYID
        AND IV00107.PRCLEVEL=IV00108.PRCLEVEL
        AND IV00107.UOFM=IV00108.UOFM
    WHERE
        IV00107.PRCLEVEL='LIST'
        AND IV00108.ITEMNMBR IS NULL

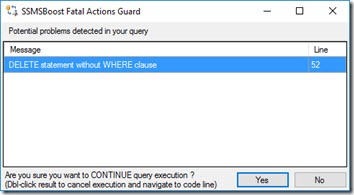

I made this mistake against a test SQL database today, which is a reminder of why you should develop against a test environment. Luckily I use SSMSBoost, a productivity plug-in for SSMS. This add-in warns you when you are executing a DELETE without a WHERE clause by throwing up a fatal action guard window, so it prevented me from actually causing any damage.

Immediately I could see what I had done and hit the No button.

Another factor that led to this potential mistake was not aliasing my tables as I normally would. If I had written the query below, the DELETE on its own would not have been able to find a table called i7, so it would not have executed and would have returned:

    Msg 208, Level 16, State 1, Line x
    Invalid object name 'i7'

    DELETE TOP(1000) i7
    SELECT *
    FROM IV00107 i7
    LEFT JOIN IV00108 i8
        ON i7.ITEMNMBR=i8.ITEMNMBR
        AND i7.CURNCYID=i8.CURNCYID
        AND i7.PRCLEVEL=i8.PRCLEVEL
        AND i7.UOFM=i8.UOFM
    WHERE
        i7.PRCLEVEL='LIST'
        AND i8.ITEMNMBR IS NULL

Yet another practice that would have helped is that I would normally have a TOP clause in the query, as in the example above. I then press F5 repeatedly (ten times in this case) until there are no more rows to process. I use TOP to make each run faster and to avoid lock escalation (we have 2 million records in the price table). Other times, if there is too much to remove by pressing F5, I put it in a loop with a @@ROWCOUNT check to see if anything is left to process, although that would not have stopped a disaster. In reality a delete on this table would have taken so long that I would soon have seen my mistake and cancelled the query before it committed, but I’m showing a principle in this post: the TOP clause would have limited how much data was lost.
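The loop mentioned above could look something like the following. This is a minimal sketch, not my exact script: the variable names @BatchSize and @RowsDeleted are made up for illustration, and the batch size of 1000 is simply the TOP value used earlier. Each pass deletes at most one batch of orphans and stops when a pass removes nothing:

```sql
-- Sketch: remove orphan price rows in small batches until none are left.
-- @BatchSize and @RowsDeleted are illustrative names, not from a real script.
DECLARE @BatchSize int = 1000;
DECLARE @RowsDeleted int = 1;  -- non-zero so the loop body runs at least once

WHILE @RowsDeleted > 0
BEGIN
    DELETE TOP (@BatchSize) i7
    FROM IV00107 i7
    LEFT JOIN IV00108 i8
        ON i7.ITEMNMBR = i8.ITEMNMBR
        AND i7.CURNCYID = i8.CURNCYID
        AND i7.PRCLEVEL = i8.PRCLEVEL
        AND i7.UOFM = i8.UOFM
    WHERE
        i7.PRCLEVEL = 'LIST'
        AND i8.ITEMNMBR IS NULL;

    -- Capture the count immediately; any later statement would reset @@ROWCOUNT.
    SET @RowsDeleted = @@ROWCOUNT;
END
```

Because the loop keeps going until nothing is left, it gives none of the damage limiting that a single TOP batch does, so the join and WHERE clause need to be proven with the SELECT first.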

Luckily I didn’t end up with any issues at all but, as is often the case, an accident only happens when a perfect storm of factors comes together. Keeping to my normal way of working protects me, but I thought others might learn from why I work the way I do.

If I had removed the records in production, it would not have been a big deal in this case, as we restore our production database into our test company regularly and automatically (see my post on how to do this). As these prices do not change much from day to day, I could have just squirted the missing records from that company into production, then restored the test company to a point in time and re-synced the prices from that restore, resulting in no impact on users. This is one of the reasons I’m a fan of having fresh copies of production available in test; they are also useful for patching up mistakes users make in a timely manner.