SMALL but mighty models are where it is at

Small AI models are cost-effective, fast, and well suited to many business tasks. Cheaper to train and run, they can work locally, offline, or on IoT devices, supporting efficiency and compliance. With lower energy use and affordable fine-tuning, they show that smaller, task-focused AI can deliver big results.

I am telling you this: small AI models are where the future lies for enterprise IT applications. There is a heap of effort and money going into producing massive models that try to encapsulate all our knowledge in one model, but this just isn't cutting it for the needs of AI in everyday business.

These models are hideously expensive to train, build and host, requiring the big players in the cloud to run them on our behalf. The per-token transaction costs seem acceptable until you start running large volumes of data through them—then the costs mount up very quickly!

The real action in AI is at the other end of the scale, with tiny, cheaper models. These models are cheaper to build and cheaper to run. Even when hosted in the cloud, models such as the following are much cheaper to consume and are very capable at simple, constrained business tasks:

| Model Name | Description |
|---|---|
| GPT-4o Mini | OpenAI's smaller, more affordable version of GPT-4o. |
| Claude Instant 1.2 | Anthropic's improved, lighter, and faster version of Claude. |
| Gemini 1.5 Flash | Google's model popular among developers, recently reduced in price by over 70%. |
| Molmo | A family of open-source multimodal models ranging from 1 billion to 72 billion parameters. |
| Microsoft's Phi-3.5 | An open-source small model that costs little to modify and run. |
| Meta's Llama models | Openly available models, free to download, that rival larger models. |
| WizardLM-2 7B | A smaller, cheaper model suitable for creative writing and educational use cases. |

How small are Small Language Models (SLMs)?

There is no firm definition, but from my reading, anything from a few million to a few billion parameters is what people are calling small. Let's look at the range of sizes across some popular model families:

| Name of Model | Creator | Range of Parameter Sizes |
|---|---|---|
| Llama 3 | Meta | 8B - 70B |
| Phi-3 | Microsoft | 3.8B - 14B |
| Gemma | Google | 2B - 27B (Gemma and Gemma 2) |
| Mixtral | Mistral AI | 8x7B - 8x22B (mixture of experts, roughly 47B - 141B total) |
| OpenELM | Apple | 270M - 3B |

I feel that 8B parameters and under is small, but those working on tiny devices may think that is huge. Just be aware that model size categories are not formally defined; they are subjective.
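A rough back-of-envelope calculation shows why 8B can feel huge on a tiny device. It estimates the memory needed just to hold a model's weights. The sketch assumes memory ≈ parameters × bytes per parameter. It ignores activations, the KV cache and runtime overhead, so real requirements will be higher.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Ignores activations, KV cache and runtime overhead (real usage is higher).

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for params in (1e9, 3e9, 8e9, 70e9):
        row = ", ".join(
            f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{params / 1e9:.0f}B params -> {row}")
```

On these assumptions, an 8B model needs about 16 GB at 16-bit precision but about 4 GB at 4-bit. That gap is why lower-precision weights matter so much on phones and sensors.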

Distributed AI and orchestrated models

Groups of cheap, small models collaborating on a task, perhaps voting on outcomes, make sense, even when they run as local models rather than in the cloud. This is especially true where compliance or security requires data to be kept locally, or where IoT sensors need local AI capabilities.

Distributing the models like this and letting them communicate securely means small models can do big things.
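Here is a minimal sketch of the voting idea. Several small models each label the same input, and the majority answer wins. The `ask_model_*` functions are hypothetical stand-ins for calls to real local models. They return canned answers so the example runs on its own.

```python
from collections import Counter
from typing import Callable, Sequence


# Hypothetical stand-ins for three small local models classifying a support ticket.
# In practice each would call a real model (local runtime or API).
def ask_model_a(text: str) -> str:
    return "billing"


def ask_model_b(text: str) -> str:
    return "billing"


def ask_model_c(text: str) -> str:
    return "technical"


def majority_vote(models: Sequence[Callable[[str], str]], text: str) -> tuple[str, float]:
    """Ask every model; return the most common answer and the share of votes it got."""
    answers = [model(text) for model in models]
    winner, count = Counter(answers).most_common(1)[0]
    return winner, count / len(answers)


if __name__ == "__main__":
    label, agreement = majority_vote(
        [ask_model_a, ask_model_b, ask_model_c],
        "I was charged twice this month.",
    )
    print(label, round(agreement, 2))  # billing 0.67
```

Ties need a deliberate rule. For example, three models might give three different answers. In that case you could escalate to a larger model. Otherwise `Counter.most_common` simply returns the answer it saw first.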

Training for the task: Fine-tuning

When working with small models on a specific task, it is important to fine-tune them so that their limited abilities are targeted at the task at hand. Although small models have limited abilities, make no mistake: they are also very capable. The foundational (base) model will already have been cheap to train relative to large and supersize models. Some additional training for a specific task is therefore a small price to pay, and it should be relatively easy compared with fine-tuning a larger model.

- If the task can be reduced to a simple instruction prompt, or a set of prompts, of lower complexity, then a smaller model is generally the best choice.

- If you require more complicated reasoning, then you’re going to have to pay to use a larger model.
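Much of the work in fine-tuning a small model for one narrow task is preparing examples of that task. The sketch below writes instruction/response pairs as JSON Lines. It uses the chat-style `messages` layout that several fine-tuning services accept (OpenAI's fine-tuning API, for example). Treat the exact schema as an assumption and check what your own training tool expects. The email-routing task and its examples are hypothetical.

```python
import json

# Hypothetical task: route customer emails to a department with a single word.
SYSTEM_PROMPT = "Classify the email into one of: billing, technical, sales. Reply with one word."

examples = [
    ("I was charged twice this month.", "billing"),
    ("The app crashes when I upload a photo.", "technical"),
    ("Do you offer discounts for 50 seats?", "sales"),
]


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Convert (input, expected output) pairs into chat-style JSON Lines, one record per line."""
    lines = []
    for user_text, answer in pairs:
        record = {
            "messages": [
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(to_jsonl(examples), end="")
```

The task is kept deliberately narrow: one short instruction and a one-word answer. That lets a small model spend its limited capacity entirely on the job at hand.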

Mobile devices and Internet of Things (IoT)

Another big area of interest with tiny models is running them on mobile devices such as phones and tablets. With smaller models, the memory and processing requirements are drastically reduced, allowing AI capabilities to run on a mobile device, even down in the subway, without an internet connection.

This could allow AI-enhanced features that currently only work online to work offline, even if the results are slower or lower in quality. For example, AI photo editing could remain available when the phone is out of coverage, or voice-to-text could work offline.

Intelligent sensors and devices on the network are another good use case for small models. Where possible, processing signals from sensors or CCTV cameras on the device itself distributes the AI workload. It also reduces data transmission, which helps keep data secure: the less data that is moved around for processing, the less opportunity there is for it to be compromised.
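Here is a minimal sketch of that local-first pattern. Readings are scored on the device, and only the flagged ones are sent upstream. `local_anomaly_score` is a hypothetical placeholder standing in for a small on-device model; here it is a simple z-score. The point is the shape of the pipeline, not the scoring method.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Reading:
    sensor_id: str
    value: float


def local_anomaly_score(value: float, baseline: list[float]) -> float:
    """Hypothetical stand-in for a small on-device model: a z-score against recent history."""
    mean = statistics.fmean(baseline)
    stdev = statistics.pstdev(baseline) or 1.0  # avoid dividing by zero on flat data
    return abs(value - mean) / stdev


def filter_for_upload(readings: list[Reading], baseline: list[float],
                      threshold: float = 3.0) -> list[Reading]:
    """Keep only readings the local model flags; everything else never leaves the device."""
    return [r for r in readings if local_anomaly_score(r.value, baseline) > threshold]


if __name__ == "__main__":
    history = [20.1, 20.3, 19.9, 20.0, 20.2, 20.1]
    incoming = [Reading("temp-1", v) for v in (20.0, 20.2, 27.5, 19.9)]
    flagged = filter_for_upload(incoming, history)
    print(f"sending {len(flagged)} of {len(incoming)} readings:", flagged)
```

With these example values, only the 27.5 reading would be transmitted. The other three stay on the device.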

Neural Processing Units (NPU) in devices

Laptops, CCTV cameras, tablets, phones and similar devices are increasingly shipping with specialist chips (NPUs) designed to run AI models efficiently. This makes small models more viable on these categories of devices and helps them run faster.

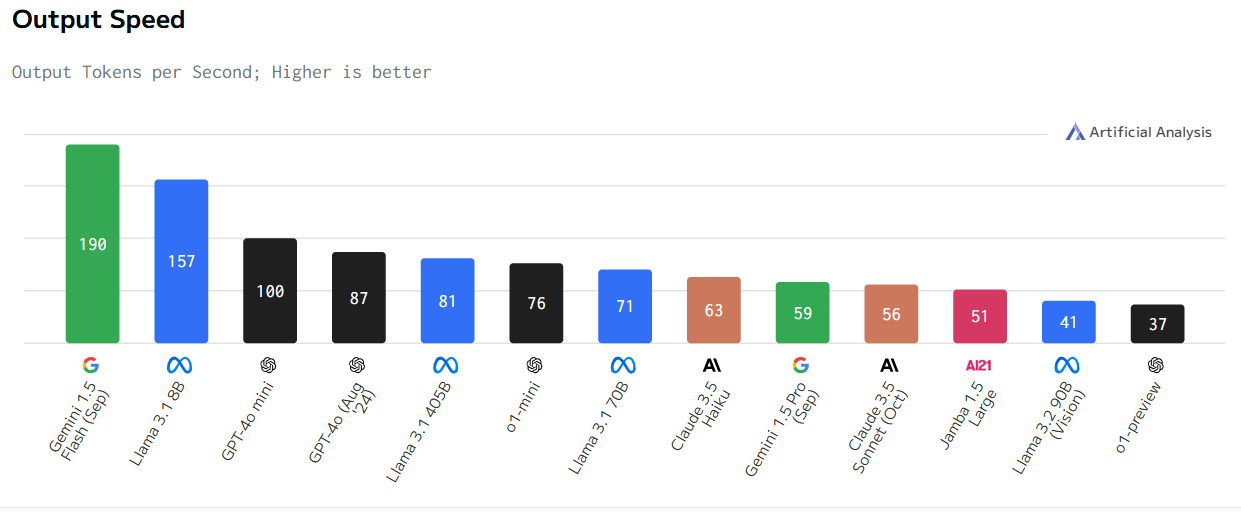

Speed of small models

Small models have significant speed advantages over larger models, especially when run locally. Because they need less compute and memory, small models on suitable hardware can be very responsive, giving quick answers to targeted, focused tasks.
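One rough way to see the speed advantage is through memory bandwidth. When a model generates text one token at a time on a single device, each token typically requires reading all of the weights from memory. Memory bandwidth therefore sets an upper bound on tokens per second. The sketch below uses that simplification. The 100 GB/s bandwidth figure is an assumed example. Real throughput will be lower once compute, KV-cache reads and software overheads are counted.

```python
def max_tokens_per_second(num_params: float, bytes_per_param: float,
                          bandwidth_gb_s: float) -> float:
    """Upper bound on single-stream decode speed if every token reads all weights once."""
    model_bytes = num_params * bytes_per_param
    return bandwidth_gb_s * 1e9 / model_bytes


if __name__ == "__main__":
    BANDWIDTH_GB_S = 100.0  # assumed device memory bandwidth, for illustration only
    for name, params in (("1B", 1e9), ("8B", 8e9), ("70B", 70e9)):
        tps = max_tokens_per_second(params, 0.5, BANDWIDTH_GB_S)  # 4-bit weights
        print(f"{name}: at most ~{tps:.0f} tokens/s")
```

Under these assumptions, a 4-bit 1B model tops out around 200 tokens per second. A 4-bit 70B model on the same hardware manages under 3.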

Small models mean efficiency

The number of parameters (the individual numerical values that define the model's behavior) is what is typically used to compare model sizes. It is also the number you will see listed against models in model libraries.
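Parameters are the weights and biases in a model's layers. For a GPT-2-style transformer, a common approximation makes the count concrete. Each of the L layers has about 12·d² parameters: attention plus a 4×-wide feed-forward block. The token embeddings add V·d, where d is the hidden size and V the vocabulary size. This is a rough sketch. It ignores biases, layer norms and position embeddings. Other architectures, such as Llama's feed-forward layers, use different ratios.

```python
def approx_transformer_params(n_layers: int, d_model: int, vocab_size: int) -> int:
    """Approximate parameter count for a GPT-2-style decoder.

    Per layer: 4*d^2 for the attention projections (Q, K, V, output)
    plus 8*d^2 for a feed-forward block with a 4x hidden width.
    Plus vocab_size*d for the token embedding matrix.
    Biases, layer norms and position embeddings are ignored.
    """
    per_layer = 12 * d_model**2
    return n_layers * per_layer + vocab_size * d_model


if __name__ == "__main__":
    # GPT-2 small configuration: 12 layers, hidden size 768, 50,257-token vocabulary.
    n = approx_transformer_params(12, 768, 50257)
    print(f"{n:,}")  # 123,532,032 (GPT-2 small is usually quoted as ~124M)
```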

It may be dull and dampen the mood, but the environmental impact of AI is huge. The projected power consumption of all these AI applications is truly eye-watering, as is the pollution that generating that power will create.

It is seriously important that we design with efficiency in mind and avoid using bigger models than are required. Bigger models burn more energy and, for many tasks, produce very similar results. Choose the model size appropriate to the use.
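A common rule of thumb puts this in numbers: a forward pass through a dense model costs roughly 2 floating-point operations per parameter per token. Energy per token is not strictly proportional to FLOPs, so treat this as a rough proxy. It still shows how quickly the work grows with model size. The model sizes and token volume below are illustrative assumptions.

```python
def inference_flops(num_params: float, num_tokens: float) -> float:
    """Approximate forward-pass FLOPs for a dense model: ~2 * parameters * tokens."""
    return 2 * num_params * num_tokens


if __name__ == "__main__":
    TOKENS = 1e9  # assumed monthly token volume for a business workload
    small = inference_flops(3e9, TOKENS)
    large = inference_flops(70e9, TOKENS)
    print(f"3B model:  {small:.2e} FLOPs")
    print(f"70B model: {large:.2e} FLOPs ({large / small:.1f}x the work)")
```

For the same token volume, the 70B model does about 23 times the arithmetic of the 3B model. If the smaller model's answers are good enough for the task, most of that extra work is wasted energy.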

There is a tendency for developers and users to think that bigger is better and to choose the biggest and newest models to run their prompts or AI workloads. This is not necessarily the case: the model type and size should be matched to the application it will be used in. That produces the most efficient outcome for the work done.
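Matching size to the application can be made a routine step. Score candidate models on a small set of real examples from your task, then pick the smallest one that clears your quality bar. In this sketch, the candidate names, sizes and accuracy figures are made-up placeholders, not benchmark results.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    name: str
    params_billions: float
    task_accuracy: float  # measured on your own evaluation set (placeholder values below)


def smallest_adequate(candidates: list[Candidate], min_accuracy: float) -> Optional[Candidate]:
    """Return the smallest model meeting the accuracy bar, or None if none does."""
    adequate = [c for c in candidates if c.task_accuracy >= min_accuracy]
    return min(adequate, key=lambda c: c.params_billions, default=None)


if __name__ == "__main__":
    # Hypothetical models and scores, for illustration only.
    candidates = [
        Candidate("small-1b", 1.0, 0.81),
        Candidate("small-3b", 3.0, 0.93),
        Candidate("medium-8b", 8.0, 0.94),
        Candidate("large-70b", 70.0, 0.95),
    ]
    choice = smallest_adequate(candidates, min_accuracy=0.90)
    print(choice.name if choice else "no model meets the bar")  # small-3b
```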

Small models suit running locally

There is another post about local models, but as everything discussed so far shows, small models are well suited to running locally. Local models fit situations where the resources to run them are limited, or where compliance or security dictates that data be kept local. Those local models, in turn, call for smaller models.

Lightweight Quantized Llama 1B + 3B models

These tiny models can be run on a mobile phone, or in a web browser, while offline. They are heavily optimised, allowing them to run on small portable devices: a preview of what is to come.
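Quantization is a large part of how models like these are slimmed down. Weights stored as 16- or 32-bit floats are mapped to low-bit integers plus a scale factor. The sketch below shows the simplest form, symmetric per-tensor 8-bit quantization, in plain Python. It is a generic illustration of the idea, not the specific scheme Meta used for these Llama models.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.42, -1.27, 0.003, 0.9, -0.55]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    max_error = max(abs(a - b) for a, b in zip(weights, restored))
    print(q)  # integers that could be stored in 1 byte each instead of 4 for fp32
    print(f"scale={scale:.4f}, max error={max_error:.4f}")
```

Each weight's rounding error is at most half the scale, so the accuracy lost depends on how spread out the original weights are. Production schemes often use per-channel or per-group scales, and lower bit widths, for that reason.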

Price & performance comparison

https://artificialanalysis.ai/leaderboards/models

This site offers great insight into the relative costs of models, along with other metrics for comparing them.
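Once you have prices from a comparison site like this, the volume effect of per-token pricing becomes simple arithmetic. The per-million-token prices and the workload below are placeholders, not current figures from that site or any provider. Substitute real input and output prices for the models you are comparing.

```python
def monthly_cost(input_tokens: float, output_tokens: float,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost in dollars, given prices per million input and output tokens."""
    return (input_tokens / 1e6) * input_price_per_m + (output_tokens / 1e6) * output_price_per_m


if __name__ == "__main__":
    # Assumed workload: 500M input tokens and 50M output tokens per month.
    IN_TOKENS, OUT_TOKENS = 500e6, 50e6
    # Placeholder prices (USD per 1M tokens), for illustration only.
    prices = {"large model": (10.00, 30.00), "small model": (0.20, 0.80)}
    for name, (p_in, p_out) in prices.items():
        print(f"{name}: ${monthly_cost(IN_TOKENS, OUT_TOKENS, p_in, p_out):,.2f} per month")
```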