Using Visual Studio Database project Dynamics GP & source control

Source control of all aspects of a Dynamics GP solution is essential to deliver a reliable, consistent software product or system to the end users. Getting life cycle control of custom objects in the SQL database can be a challenge, even more so when looking at a typical Dynamics GP SQL server database, that contains many thousands of objects. I have seen many SQL tools struggle when pointed at a typical GP company database. It seems that from my use of SQL Server Data Tools that it has issues too. In this post I go on an adventure exploring the tools in relation to Dynamics GP, the learning can be applied to other databases than GP too.

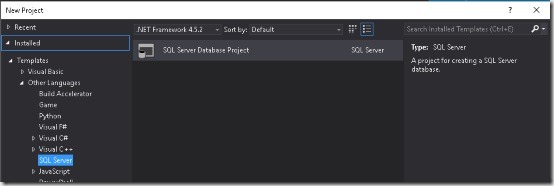

Visual Studio offers SQL Server Data Tools, managed though the extensions menu of Visual Studio, it adds the concept of a SQL Server Database Project under the new projects menu. This experiment failed in that it took too long for schema comparisons on the current version (14.0.60629.0), but lets hope the comparison tool is working more quickly in future releases.

Setting up SSDT for Dynamics GP

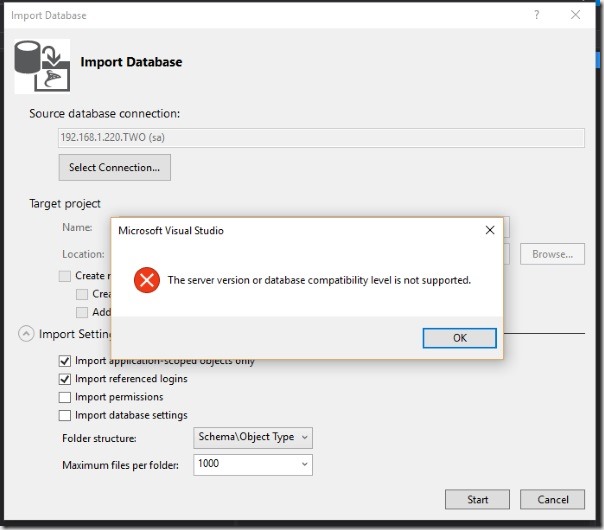

Adding this project and importing the GP company database (or any other database) brings all the “create scripts” for the objects of that database into the project. It should be noted that it is worth checking the most recent SSDT has been installed and updated or messages such as that below can occur.

Import

The existing database Dynamics GP database can be imported into the project by right clicking the project title in the solution explorer and going into the import window as shown above.

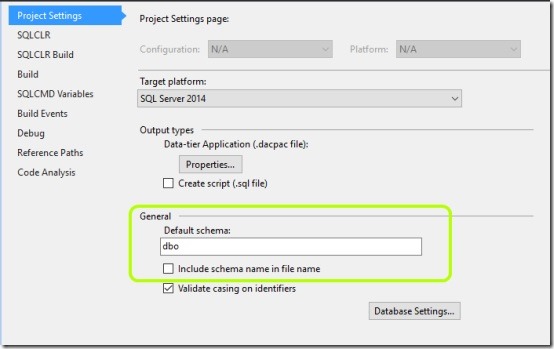

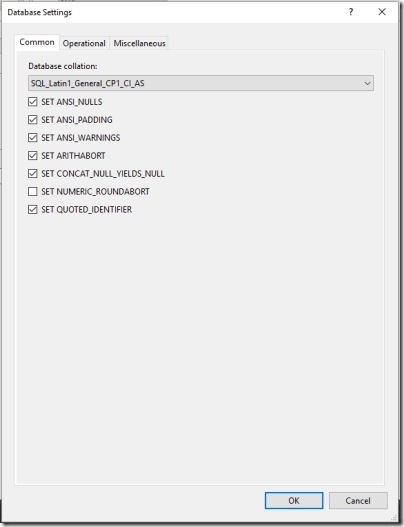

Note that behaviour of the project is governed by some settings in the database project settings page, Project Menu>> Database Project Settings. Where for example the schema name can be embedded into the file names generated. For those SQL nerds out there, as the Database Projects can create the database when used in green field development, database core settings are found behind the Database Settings button also illustrated on screen shot below, this is not really relevant to the majority of users for this discussion.

SQL CLR Builds can be controlled by the project too, see the SQLCLR Build tab of the settings for more detail, again most users reading this will not be going there.

After import

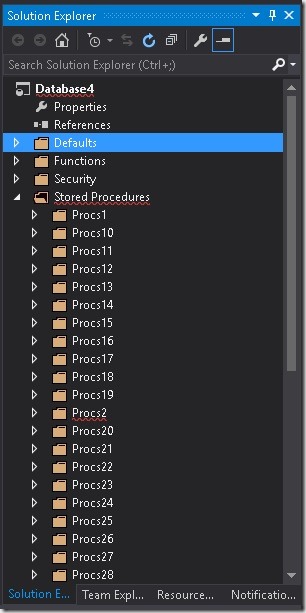

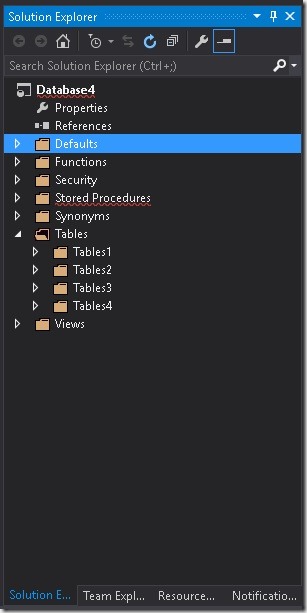

The organisation of the generated object tree can be changed by using the folder structure drop down list at import, where options as to how the objects are stacked hierarchically in the object tree can be decided. There is also a maximum of one thousand files allowed in any of the generated folders holding the SQL objects on disk. This is a limit imposed by the data tools team and I am unaware of any way to increase this number. I assume this is to keep the application performance acceptable in use. Shown below are the “Procs1..” folders generated, each containing 1000 of the GP stored procedures. There are similar folders generated for Tables and other objects under each node.

Needless to say, due to the large number of objects in the GP database, there will be many of these folders under each of the nodes. I find this really annoying as I’m used to the view in SQL server management studio that has all the objects listed directly under each node, this makes finding objects much more difficult, but we still have the usual “find tools” in visual studio to help.

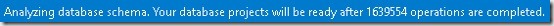

After import the tool status bar will show the following message and the number of operations will keep climbing to a worrying high level, but eventually it works its way through them all and you are ready to go. The number went up to 1.6 million and then dropped back down to nothing, and it took a while (approx 20 mins) to do so on my infrastructure. I have seen this process adversely load a production database so would recommend sticking to development databases where possible.

The large number of objects in the GP database makes SQL data tools choke or perform slowly on some operations, just be patient, some processes can seen very sluggish and delayed when running against GP databases. Operations such as right clicking the project and creating a snapshot cause Visual Studio to hang for prolonged periods of time, with no UI feedback about what is happening, however mostly the operation will eventually complete, in this example after about two minutes.

Once loaded into the project, the create scripts for all the objects in the database become available as .SQL files in a file structure on disk as represented by the solution. The project can then checked in the TFS source control, to achieve document control over the objects.

Schema Compare

The schema compare feature is crucial for GP usage as it allow the GP databases to be compared to the project to see what changes there have been since the last compare or import. This is important as not all objects in a enterprise GP database are in the developers control. The tooling allows for the changes to scripted out into SQL change scripts, thus the change scripts can be added to the code project as upgrade scripts for deployment purposes or pushed using continuous integration into a test rig. Continuous integration and GP? – I can dream!

Performing the compare, right click the project title in solution explorer and select schema compare, then set the source and destination, these can be databases or projects.

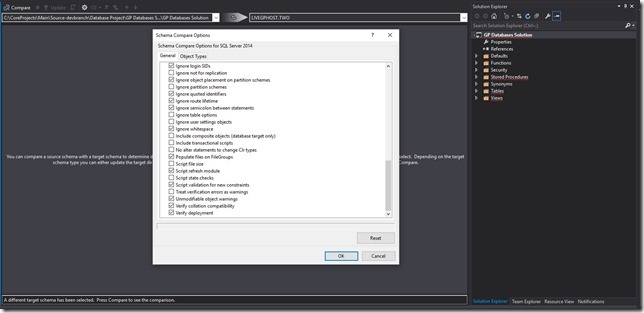

It is vital to click the gear icon and examine the compare options. The compare options are very powerful and can mean the difference between picking up on a change or not doing so, difference between your application being deployed with a missing object or not!

In this window there are options to ignore changes that you do not think will affect the operation of the application, such as “Ignore semicolon between statements” but others can radically change what objects are checked.

Here is the full list of options available in the compare options window:

Allow drop blocking assemblies

Allow incompatible platform

Backup database before changes

Block on possible data loss

Comment out SetVar declarations

Compare using target collation

Deploy database in single user mode

Disable and reenable DDL triggers

Do not alter Change Data Capture objects

Do not ALTER replicated objects

Drop constraints not in source

Drop DML triggers not in source

Drop extended properties not in source

Drop indexes not in source

Drop objects not in source

Drop permissions not in source

Drop role members not defined in source

Drop statistics not in source

Generate smart defaults, when applicable

Ignore ANSI Nulls

Ignore authorizer

Ignore column collation

Ignore comments

Ignore cryptographic provider file path

Ignore DDL trigger order

Ignore DDL trigger state

Ignore default schema

Ignore DML trigger order

Ignore DML trigger state

gnore file and log file path

Ignore file size

Ignore filegroup placement

Ignore fill factor

Ignore full text catalog file path

Ignore identity seed

Ignore increment

Ignore index options

Ignore index padding

Ignore keyword casing

Ignore lock hints on indexes

Ignore login SIDS

Ignore not for replication

Ignore object placement on partition schemes

Ignore partition schemes

Ignore partition schemes

Ignore quoted identifiers

Ignore route lifetime

Ignore semicolon between statements

Ignore table options

Ignore user settings objects

Ignore whitespace

Include composite objects (database target only)

Include transactional scripts

No alter statements to change Clr types

Populate files on FileGroups

Script file size

Script refresh module

Script state checks

Script validation for new constraints

Treat verification errors as warnings

Unmodifiable object warnings

Verify collation compatibility

Verify deployment

Now click compare, go make a coffee, cocktail or whatever does it for you while the tool does it work. Some time later the results will be returned showing all the objects that differ between databases. In fact it only took a twenty mins to complete on my system with the settings I had selected the first time, with the project un-built.

To get a reliable comparison the project must successfully build. Later I describe how to get the project to build, it was after a successful build I found the next problem.

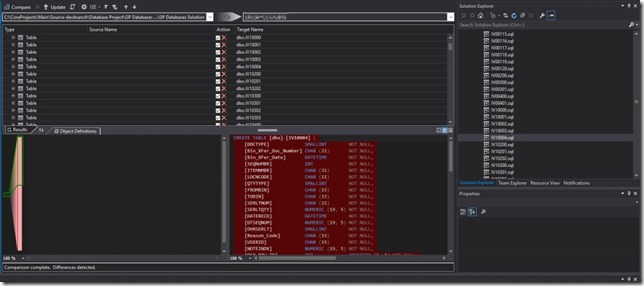

Running the SSDT Compare tool back against the origin database would cause a hang at "Initializing comparison, Loading...". So find something lengthy to do, like painting the house or going to visit friends as leaving it for 12 hours did help, after which it did return results as shown below.

It seems that the comparison can't cope with the GP database size. This is a massive issue as without the comparison back to the current database state (or other database states if used for test or dev), maintenance of objects that require check-in due to changes becomes manual in nature, invalidating the whole purpose of the tool in respect of GP projects.

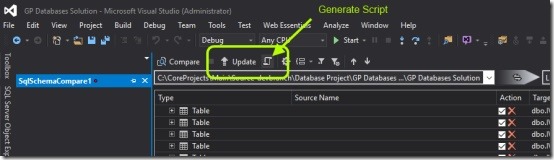

In the screen shot you can see the Update and Generate script tool buttons. With GP we would not want to use the UPDATE button as this would attempt to run the selected scripts against the target database. Instead script out the update, this will create SQL script representing the selected object changes. Check the generated script over, it is auto generated and may contain some undesired SQL. Once verified this change script can be checked into source control itself and used as a record of upgrades to the database or distributed for ops teams to apply to the database that requires syncing.

The tools could be used in one off reconciles of databases -if only the tooling worked for GP sized databases more quickly! Other tasks could include finding where Dynamics GP upgrades have not updated some database objects correctly, by comparing to a known fresh install the tooling may help identify the problem objects, or now orphaned objects in the database, left by legacy VAR applications, no longer in use.

I have used other SQL comparison tools that did struggle with GP but eventually worked, so this experience with the schema compare tool is not unexpected, a pity this takes so long to run.

Source Control

Although the database projects can be used without adding them to source control (makes sense for one off tasks). My objective is to add the custom GP objects to source control for all the same QA, productivity, backup, documentation, lifetime management reasons that software source code is already held there for.

There is not a lot to say about source control, it works the same as your other projects, add the solution to source control and the changes can be checked in and managed in source control exactly the same as with any other project. This makes it sound unexciting, but the power is the full traceability of the changes to database objects over time, with check in comments, work items associating them with tasks etc. It also gives visibility to the wider team members of objects being edited preventing or bringing awareness of two team members trampling over the same objects at the same time.

It is also useful when working with multiple customers' databases, to keep a track of custom customisations and be able to restore a test and development database with the operational database objects for your customers current setup. Obviously in this case you will not normally have the ability to import the customer database, but your development database can manage your own objects that exist at the customer sites.

While we are on the subject, remember to add your SSIS projects and SSRS projects to source control too!

Building the project

If the SQL was our own as part of a green field development project, we would build the project regularly, as building checks the object dependencies and validity of the SQL objects (database).

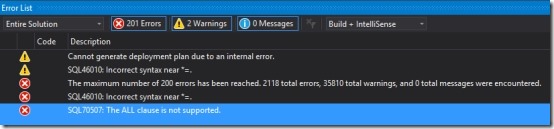

However we have inherited a very, very large SQL project by importing GP databases, it is not realistic to expect them to build. Although it is possible to control the database without it building, ideally it should be built. The problem is GP has some depreciated (and complicated) TSQL in its database that causes errors (rather than warnings) when a build is attempted. An example from the screen shot is the “ALL” clause for TSQL is depreciated for REVOKE and generates the error shown. Also note we have 2118 errors and 35,810 warnings overall, sorting this out is not possible or reasonable, as those are not objects we can change as they are part of the application.

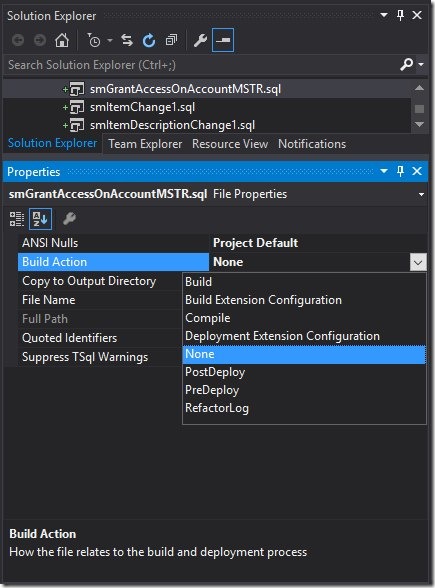

Objects can, as with c# or vb.net, be excluded from the build. Here we see the smGrantAccessOnAccountMSTR.sql that is causing that previous error regarding the “ALL” clause. By setting the build action to None it will be removed from the build and hence the error list, however this is going to be a long process for all objects!

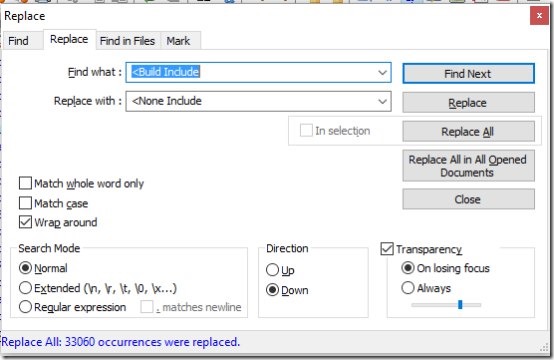

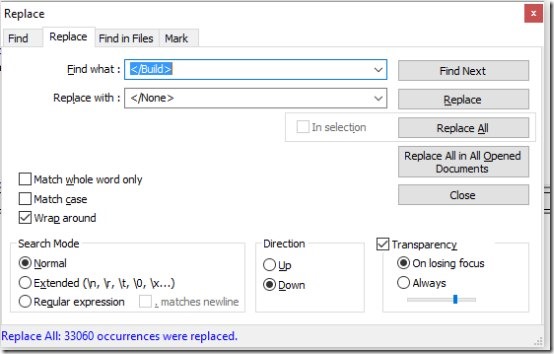

It is possible to exclude ALL objects from the build by editing the .sqlroj file, editing the XML to exclude all. To do this I used Notepad++ to do a search and replace. It is worth checking your file before actually doing the replace to ensure no other nodes get caught up, if they do I’m certain a regular expression search and replace could be used to more accurately target them.

First replace the “<Build Include” tags with <None tags, like so…

Then deal with the non-self closing tag like so…

Having done this and reloading the solution, it builds, not a surprise as nothing is actually being built anymore!

For those of your into continuous integration and can get the project to build (indeed even if you are not into CI), the post Continuous integration with SQL Server Data Tools and Team Foundation Server is a good read to see what is possible with publishing the project.

Notes

Although technically I can get the objects controlled by source control, having to wait twelve hours for the comparison to complete is not helpful, and makes it almost impractical to use.

To say I'm disappointed is an understatement. However the principles of this exercise are applicable to other databases, and perhaps a future version or service pack may may make the tooling usable.

I will keep an eye on this product as I think it could be very useful when it works more quickly.