Logic of thought prompting

Logic of thought prompting is a technique that steers the AI away from quick responses and into a more considered answer, with significantly more reasoning and detail.

The basics of prompt engineering were introduced in a previous post, "What are AI prompts and what is prompt engineering?" Once the basics are under your belt, it’s time to roll up your sleeves and learn some precision prompting techniques that target particular types of problem-solving.

Logic of Thought Prompting (LoT)

Logic of Thought is a progression of Chain of Thought (CoT) prompting, which was explained in the earlier post.

LoT is a niche prompting technique to be used only when targeting a response that is rooted in logic. LoT will guide the AI through much more processing to achieve a richer result, but it will be slower. It is inappropriate to use this prompting style for general purposes. Keep it in your repertoire of prompting techniques for when it is required.

OpenAI has embedded Chain of Thought directly into the o1 reasoning model by default, allowing for better results in the workloads o1 is designed to handle. It is still helpful to understand how the technique works, because it can be applied manually, through prompting, to older models and to models from other providers.

How Does It Work?

Imagine you’re trying to explain why the sky is blue to a small child. You don’t just jump to "because of light scattering"; instead, you go through it step by step, using simple rules like "light comes from the sun, and it bounces around in the air." This shows them the logical path. In doing so, you help them think more about the problem by breaking the process into multiple stages rather than jumping straight to a conclusion.

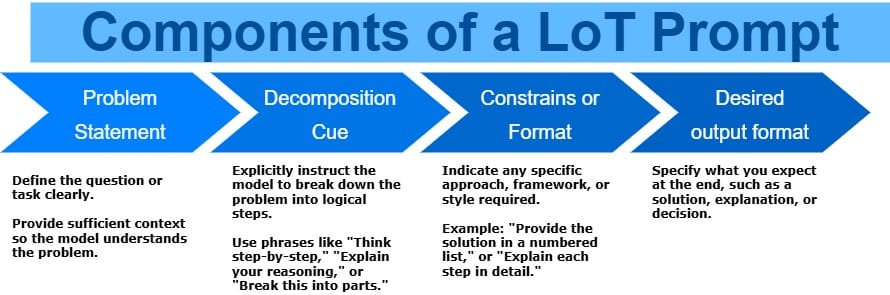

Logic of Thought (LoT) prompting is about helping AI connect concepts and reason in a logical way, just like this. Instead of merely following steps or patterns, it ensures that each thought leads naturally to the next, arranging the stages of the result in a sensible order. This approach makes the AI spend more computation on its answer, which tends to produce more thoughtful and realistic results with a logical flow from start to finish.

This process forces the AI to spend more time "thinking about the problem," countering its default behaviour of providing an answer quickly, which does not always produce the best response.

It is not enough to instruct the AI to simply "be logical" in its response. As with all prompting, it is necessary to explain what you mean by being logical. People naturally tend to be lazy and less verbose in their prompting. You must explicitly tell the AI to break the problem into logical stages.

Example

-- A train travels 60 miles in 2 hours. Then it travels 90 miles in 3 hours. What is the train's average speed for the entire journey?

-- Instructions: Think step-by-step to find the answer. Break the problem into smaller parts: calculate total distance, total time, and average speed.
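
The prompt above can also be assembled programmatically. The following is a minimal Python sketch, not a definitive implementation: the function name `build_lot_prompt` and its parameters are hypothetical, and the sketch only builds the prompt text. Sending it to a model is left out because that depends on the provider you use.

```python
def build_lot_prompt(question, stages):
    """Combine a question with explicit step-by-step instructions.

    `build_lot_prompt` and `stages` are illustrative names, not part of any library.
    """
    # Join the stages into a readable list: "a, b, and c".
    if len(stages) > 1:
        stage_text = ", ".join(stages[:-1]) + ", and " + stages[-1]
    else:
        stage_text = stages[0]

    # Spell out what "being logical" means instead of just asking for logic.
    instructions = (
        "Instructions: Think step-by-step to find the answer. "
        f"Break the problem into smaller parts: calculate {stage_text}."
    )
    return f"{question}\n\n{instructions}"


question = (
    "A train travels 60 miles in 2 hours. Then it travels 90 miles in 3 hours. "
    "What is the train's average speed for the entire journey?"
)
prompt = build_lot_prompt(question, ["total distance", "total time", "average speed"])
print(prompt)
```

Keeping the stages as a separate list makes it easy to reuse the same instruction wording for other logic-based questions.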

Breakdown of the Logic of Thought

- Restate the Problem:
  - What do we need to find? (The train's average speed.)

- Decompose the Problem:
  - Step 1: Calculate the total distance traveled.
  - Step 2: Calculate the total time taken.
  - Step 3: Use the formula for average speed: total distance ÷ total time.

- Perform Calculations:
  - Step 1: Total distance = 60 miles + 90 miles = 150 miles.
  - Step 2: Total time = 2 hours + 3 hours = 5 hours.
  - Step 3: Average speed = 150 miles ÷ 5 hours = 30 mph.

- Provide the Final Answer:
  - The train's average speed is 30 mph.
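
The same staged calculation can be checked in a few lines of Python. This is only an illustrative sketch for verifying the arithmetic; the function name `average_speed` and the `legs` structure are assumptions made for this example.

```python
def average_speed(legs):
    """Return the average speed for a journey given (distance, time) legs."""
    # Step 1: total distance traveled, in miles.
    total_distance = sum(distance for distance, _ in legs)
    # Step 2: total time taken, in hours.
    total_time = sum(time for _, time in legs)
    # Step 3: average speed = total distance / total time.
    return total_distance / total_time


# Each leg is (distance in miles, time in hours), taken from the example problem.
legs = [(60, 2), (90, 3)]
speed = average_speed(legs)
print(f"Average speed: {speed:.1f} mph")  # Average speed: 30.0 mph
```

The function mirrors the three stages of the breakdown, so each line of code maps to one step the AI is asked to perform.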

Model Output Based on the Prompt

- The train travels 60 miles in 2 hours and then 90 miles in 3 hours.

- Total distance traveled = 60 + 90 = 150 miles.

- Total time taken = 2 + 3 = 5 hours.

- Average speed = Total distance ÷ Total time = 150 ÷ 5 = 30 mph.

Answer: The train's average speed for the entire journey is 30 mph.

Notice how the model broke the problem down and worked through each stage before answering. In the right circumstances, this can be a powerful prompting technique.