What are the Differences Between Instruct, Chat, and Chat-Instruct Models in LLMs?

When downloading or selecting models, you will see them labelled as instruct, chat, or chat-instruct models. What are they, and what are the differences?

TL;DR: A base model provides raw text autocomplete and requires careful prompting. Instruct and chat models are tuned from the base model to follow instructions or hold a conversation, as ChatGPT does.

Large Language Models (LLMs) are essentially sophisticated text completion tools, predicting the next token (roughly, a word or piece of a word) in a sequence based on a prompt. If you prompt a base LLM that hasn't been trained to "follow instructions" or "chat," the response can seem incoherent. The base model’s training data is mostly not conversational or instruction-based, which makes natural dialogue difficult.

How can something that only generates the next token produce responses that hold up in a human conversation, when all it has to draw on is its training data? The answer lies in the difference between text completion and chat completion.

When interacting with a raw, untuned model, the experience is similar to using autocomplete in a search bar. You must provide the starting words, and the model completes them. However, if we want models to understand commands or hold a conversation, they need extra tuning.
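To make the contrast concrete, here is a minimal sketch of the two input shapes. The function names and the "Q:/A:" framing are illustrative assumptions, not any particular library's API: a base model is handed a document to continue, while a chat model is handed role-tagged messages.

```python
from typing import Dict, List


def build_completion_prompt(question: str) -> str:
    """Frame a question as a document that a base model can continue.

    The worked examples establish a pattern; the prompt ends at "A:" so
    the most likely continuation is the answer.
    """
    examples = [
        ("What is the capital of Italy?", "Rome"),
        ("What is the capital of Japan?", "Tokyo"),
    ]
    blocks = [f"Q: {q}\nA: {a}" for q, a in examples]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


def build_chat_messages(question: str) -> List[Dict[str, str]]:
    """A chat or instruct model can simply be asked the question."""
    return [{"role": "user", "content": question}]


print(build_completion_prompt("What is the capital of France?"))
print(build_chat_messages("What is the capital of France?"))
```

With the base model, the prompt itself has to do the work of showing what kind of text comes next; with a tuned model, that behaviour has been trained in.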

To overcome this and make models more useful for instruction-driven and chat-driven uses, base models are further trained on additional data that shapes their output into natural dialogue or task-focused responses.

Chat Model roles

Chat models are trained on question-and-answer dialogues and natural conversations, so they can handle back-and-forth exchanges. This extra training makes them more responsive to conversational input: their replies feel natural, align with human preferences for language, and tend to be more helpful and safe. They’re better at keeping context across turns and responding appropriately within the flow of dialogue.
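In practice, "keeping context across turns" usually means the whole conversation so far is passed back to the model on every turn. The sketch below illustrates that with a stand-in model; `stub_model` and `chat_turn` are hypothetical names for illustration and do not call a real LLM.

```python
from typing import Dict, List

Message = Dict[str, str]


def stub_model(history: List[Message]) -> str:
    """Hypothetical stand-in for a chat model: reports how much it can see."""
    return f"I can see {len(history)} message(s) so far."


def chat_turn(history: List[Message], user_text: str) -> List[Message]:
    """Add the user's message, get a reply, and return the extended history."""
    history = history + [{"role": "user", "content": user_text}]
    reply = stub_model(history)
    return history + [{"role": "assistant", "content": reply}]


history: List[Message] = []
history = chat_turn(history, "Hello")
history = chat_turn(history, "What did I just say?")

for message in history:
    print(f"{message['role']}: {message['content']}")
```

On the second turn the stand-in model receives three messages, not one, which is what lets a real chat model refer back to earlier parts of the conversation.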

Instruct Model roles

Instruct models are fine-tuned for task-based interactions. Their training augments the base model with a corpus of instruction-response pairs, teaching them how to understand direct commands and solve tasks. This process, known as Instruction Fine-Tuning (IFT), makes them better suited to handling instructions than casual conversation. If you're interested in the technical details, look up Reinforcement Learning from Human Feedback (RLHF), which helps further refine these models to follow instructions reliably.
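As a rough illustration of what instruction-response pairs look like once prepared for training, the sketch below flattens each pair into a single text string. The "### Instruction / ### Response" layout is an assumed, illustrative format; real datasets and models each use their own.

```python
from typing import List, Tuple


def format_training_example(instruction: str, response: str) -> str:
    """Join one instruction-response pair into a single training text.

    The section markers here are an illustrative assumption, not a standard.
    """
    return (
        "### Instruction:\n"
        f"{instruction}\n\n"
        "### Response:\n"
        f"{response}"
    )


pairs: List[Tuple[str, str]] = [
    ("Translate 'good morning' into French.", "Bonjour"),
    ("List three primary colours.", "Red, yellow, and blue."),
]

training_texts = [format_training_example(i, r) for i, r in pairs]
print(training_texts[0])
```

Seeing many examples in one consistent layout is what teaches the model that text after the instruction marker is a task and text after the response marker is its answer.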

For both types, the training helps arrange language components in ways suitable for either chatting or following instructions. In practice, this means you, as the user, provide one half of the conversation, while the model fills in the other half, drawing on what it learned in training. Remember, this is all about getting the model to emit tokens in the right order to form a good response.

Unlike base models, which rely on implicit cues from the context, instruct models can interpret and act on clear, direct instructions.

The Role of System Prompts

These models also use a special prompt structure. The "instruct" prompt template marks which text came from the user and which came from the model, so the LLM can tell its own messages apart from the user's and it is clear who is "speaking." There is also a system prompt, which sits over all of your individual prompts and lets you tune how the AI responds to every one of them, for example by giving it a specific writing style or making it write terse or verbose messages.
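The sketch below shows one way such a template can turn a system prompt and a user message into the single string the model actually sees. The `<|im_start|>` and `<|im_end|>` markers are modelled loosely on ChatML-style templates and are an assumption here; each model family defines its own markers, so check the model's documentation for the real ones.

```python
from typing import Dict, List


def render_chat(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render role-tagged messages into one prompt string.

    Marker tokens are illustrative; real models define their own template.
    """
    parts = []
    for message in messages:
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    if add_generation_prompt:
        # Leave an open assistant turn so the model writes the reply.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


messages = [
    {"role": "system", "content": "Answer tersely."},
    {"role": "user", "content": "What is an LLM?"},
]

prompt = render_chat(messages)
print(prompt)
```

Under the hood this is still text completion: the template ends with an open assistant turn, and the model continues from there.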

Specialization and Flexibility

Models can be trained for all sorts of specialist roles, such as chat or instruction-driven roles. They remain adaptable both within and outside the role they were optimised for. Just because a model was trained for chat does not mean it cannot respond to instructions; it simply means each model performs best at the task it was trained for. When all is said and done, a label such as "Instruct" is merely an aid for users choosing between models for a given use case. There is overlap, and they are not totally different beasts.

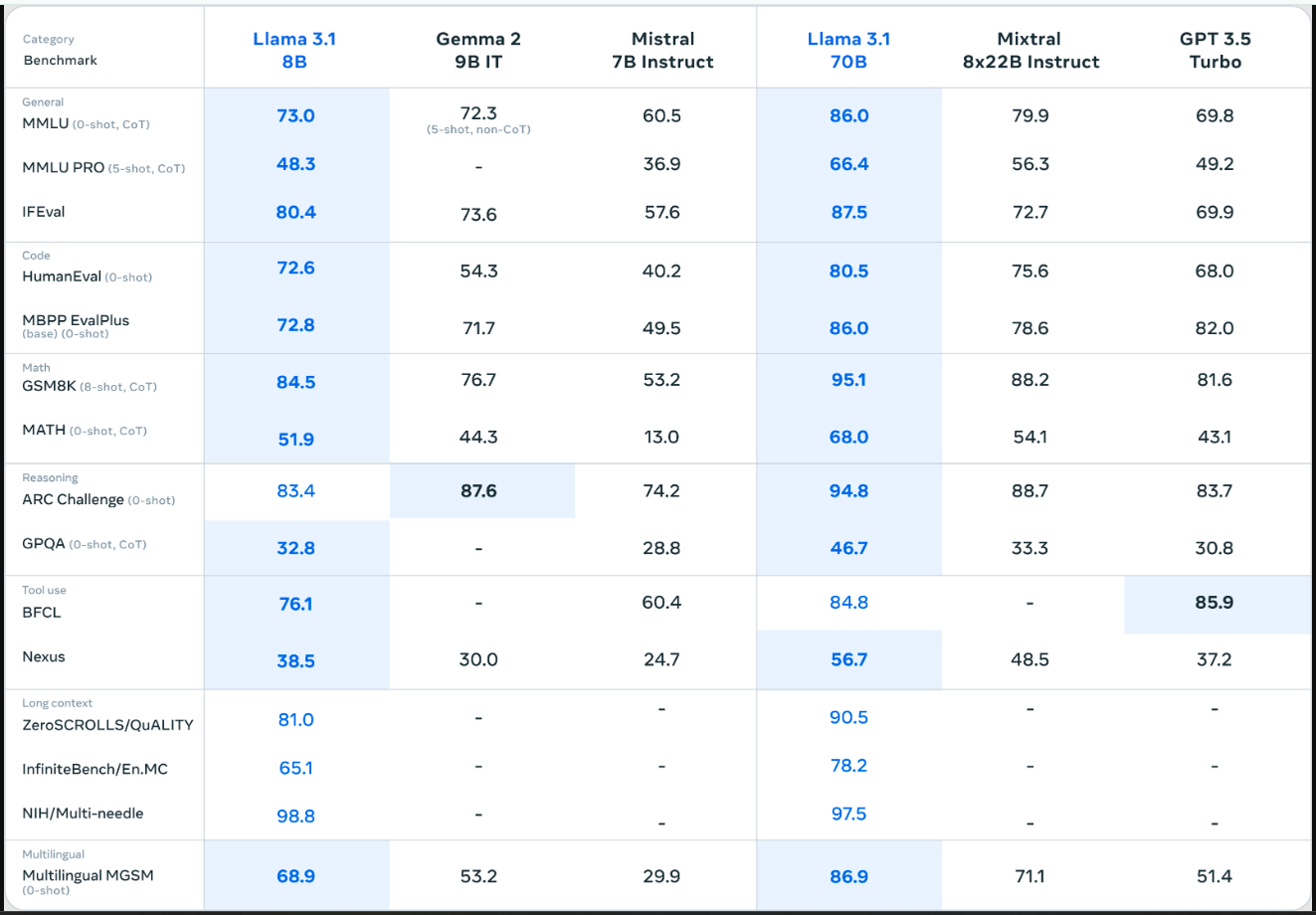

Performance and Benchmarking

Model creators constantly refine their tools to compete both within their company and with others. Benchmarks help compare models, though models may be trained with particular benchmarks in mind, which can influence the choice of training data.

If further customization is needed for a specific use, starting from a suitable instruct or chat model is often the best approach, because it builds on the work of the experts who created the underlying model.

What is an Epoch?

You might come across the term "epoch" in machine learning. An epoch is one complete pass through the training dataset during training. The number of epochs varies widely: large language models are often pretrained for roughly a single pass over a very large dataset, while fine-tuning runs commonly use just a few epochs.
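The relationship between epochs, dataset size, and training steps is simple arithmetic. The sketch below uses made-up numbers (10,000 examples, a batch size of 32, 3 epochs) purely for illustration.

```python
import math


def steps_per_epoch(num_examples: int, batch_size: int) -> int:
    """Number of batches needed to see every example once."""
    return math.ceil(num_examples / batch_size)


def total_steps(num_examples: int, batch_size: int, epochs: int) -> int:
    """Total training steps across all passes over the dataset."""
    return steps_per_epoch(num_examples, batch_size) * epochs


# Illustrative numbers only.
print(steps_per_epoch(10_000, 32))   # 313 (the last batch is partial)
print(total_steps(10_000, 32, 3))    # 939
```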

There are many more posts on AI learning here, so feel free to explore!

I hope this basic overview helped introduce some of the concepts and terminology that you will encounter when getting started with AI. It is part of a series of posts on AI concepts.