Yes you can - SQL Server Table Compression and Dynamics GP

Today I attended SQLBits in Manchester, UK, where there was a session, “Performance tuning SQL server on crappy hardware”, by Monica Rathbun.

Monica has a fast and punchy presentation style that I enjoyed. Although I had already experienced or knew most of what was covered, it was still a good presentation. There was one takeaway I noted in my notebook to come back to later. Now back at the hotel, I’m having a look.

Monica was promoting the use of COMPRESSION – not just backup compression but ROW/PAGE database compression in the database engine itself.

By compressing the data in the database, the theory goes that you reduce the I/O required to move the data around and allow much more relevant data to be held in SQL Server’s caches, and perhaps the underlying storage system’s caches too. Having more data in memory leads to a more performant system.

For some reason the existence of compression in the database engine was something that had slipped under my radar, perhaps because it used to be an Enterprise feature. Now it’s available to me in our SQL Server 2016 Standard Edition (from Service Pack 1 onwards).

This is particularly interesting to Dynamics GP users as our database is full of padded CHAR data types, has very wide tables full of only partially used data (depending on modules used) or repeating data in the case of settings flags. Dynamics GP also has many tables full of decimal columns that are all zero, again due to configuration or options in how GP is set up or what modules are active. So from the outset it feels like Dynamics GP would benefit.

“Enabling compression only changes the physical storage format of the data that is associated with a data type but not its syntax or semantics”. This means the compression occurs inside the SQL engine but is transparent to the application interacting with SQL Server. There are two levels of compression of interest and available to us. ROW compression takes each data row in the table and does the following:

- It uses variable-length storage format for numeric types (for example integer, decimal, and float) and the types that are based on numeric (for example datetime and money).
- It stores fixed character strings by using variable-length format by not storing the blank characters.

So imagine how much room can be saved when you consider the fields in Dynamics GP are fixed length!
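To get a feel for the padding that ROW compression avoids storing, a quick check like the one below compares the bytes a fixed-length CHAR value occupies with the characters it actually holds. The CHAR(30) width and the 'WIDGET' value are made up for illustration and are not taken from a real GP column.

```sql
-- Illustrative only: the width and value are assumptions, not a real GP column.
DECLARE @padded CHAR(30) = 'WIDGET';

SELECT DATALENGTH(@padded) AS bytes_stored_uncompressed, -- full declared width, trailing blanks included
       LEN(@padded)        AS characters_used;           -- LEN ignores trailing blanks
```

Here the value occupies the full 30 bytes even though only 6 characters carry any information. That gap is roughly what ROW compression reclaims on each padded column.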

What is more, there is another option: PAGE compression, which looks at repeating data within the pages of data stored on disk and compresses that data. As this works over an entire page it is heavier on CPU resources, but it is great where there is a lot of repeated data down the rows of a table. Wait, repeating data down the rows of a column? That is what we get lots of, due to status flags and little-used fields in the GP tables that vary little from top to bottom of the table.

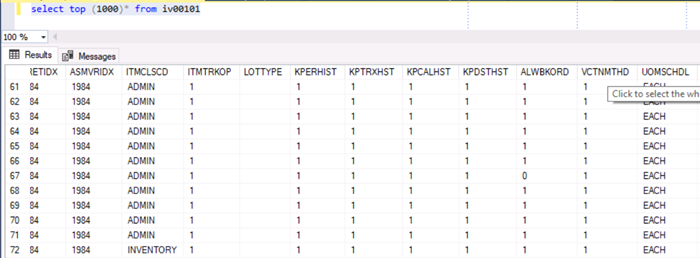

Just look at something like the Item Master table IV00101 or one of the pricing tables. There are distributions and settings that are the same for all items, and this repeated content in the pages is ripe for compression.

So both the nature of the data in the tables and the use of compressible data types by Dynamics GP make it look like a good candidate for compression.

Compression does cause more CPU load, but unless you are pulling millions of rows it seems insignificant. See more here:

https://sqlperformance.com/2017/01/sql-performance/compression-effect-on-performance where it is shown to have little effect.

We can run

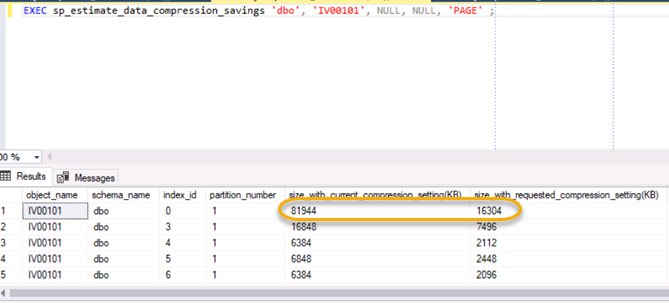

EXEC sp\_estimate\_data\_compression\_savings 'dbo', 'IV00101', NULL, NULL, 'PAGE' ;

This will show us, by sampling a subset of the table much as statistics sampling does, how much space should be saved by compressing the table, without having to actually do it. Let’s try it with the Item Master in Dynamics GP.

SELECT COUNT(\*) from IV00101

EXEC sp\_estimate\_data\_compression\_savings 'dbo', 'IV00101', NULL, NULL, 'PAGE' ;

So we can see the Item Master table goes from 81,944KB to 16,304KB, which is only 20% of what it was!

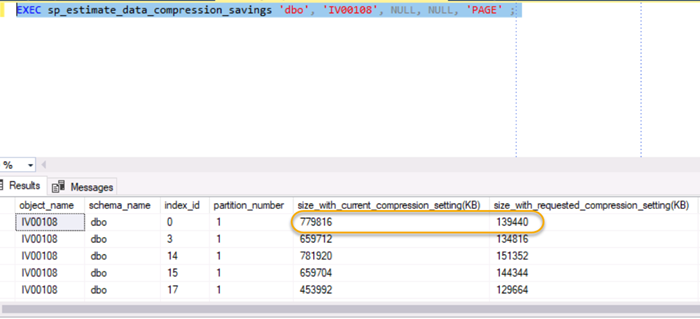

Now trying it with IV00108, where SELECT COUNT(*) FROM IV00108 returns 6,107,169 rows, we get 779,816KB going down to 139,440KB, which is only about 18% of what it was before.

So you can see how much can be saved this way. Imagine the reduced I/O from reading only around 20% of what used to be read.
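The estimates above can be collected into one result set so the percentages are worked out for you. This is a minimal sketch, assuming the eight-column result set that sp_estimate_data_compression_savings returns on SQL Server 2016; the temp table name and its column names are my own and purely illustrative.

```sql
-- Holding table for the procedure's output (column names here are illustrative).
CREATE TABLE #savings (
    object_name         sysname,
    schema_name         sysname,
    index_id            int,
    partition_number    int,
    current_kb          bigint,  -- size with the current compression setting (KB)
    requested_kb        bigint,  -- estimated size with the requested setting (KB)
    sample_current_kb   bigint,
    sample_requested_kb bigint
);

INSERT INTO #savings
EXEC sp_estimate_data_compression_savings 'dbo', 'IV00101', NULL, NULL, 'PAGE';

INSERT INTO #savings
EXEC sp_estimate_data_compression_savings 'dbo', 'IV00108', NULL, NULL, 'PAGE';

-- One row per table: totals across all its indexes and the estimated size as a percentage.
SELECT object_name,
       SUM(current_kb)   AS total_current_kb,
       SUM(requested_kb) AS total_estimated_kb,
       CAST(100.0 * SUM(requested_kb) / NULLIF(SUM(current_kb), 0) AS decimal(5, 1)) AS pct_of_original
FROM #savings
GROUP BY object_name
ORDER BY total_current_kb DESC;

DROP TABLE #savings;
```

Because the procedure returns one row per index and partition, the totals here cover the table plus all of its indexes, so they may not match a figure read from a single row of the raw output.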

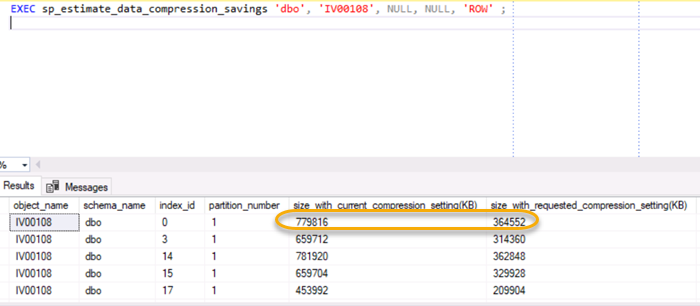

Even going down to ROW compression gives you less compression, but still a substantial saving, and with less overhead too:

Only 46% of what it was with row-level compression.
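The row-level estimate comes from the same procedure with the last argument changed. The post does not record which table produced the 46% figure, so IV00101 below is an assumption.

```sql
-- Same estimate as before, but for ROW rather than PAGE compression.
-- The table name is an assumption; substitute whichever table you are assessing.
EXEC sp_estimate_data_compression_savings 'dbo', 'IV00101', NULL, NULL, 'ROW';
```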

Downside

There are not many downsides. The main one is technical: compressing the data takes up CPU. Most SQL Servers are not CPU bound, so this should not be an issue, but the increase is typically 10-30%, so check your current CPU load first. As compression is selected table by table, you could tackle only the largest tables in GP to get the majority of the benefit without having to apply compression to every table and index. When the data is written you take a hit on compressing it, to reap the rewards later when it is read. So tables and indexes with a great number of inserts per second may cause issues, although the loads would have to be big.
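To pick out the most sizable tables, something along these lines should do. It is a sketch using the standard catalog views and sys.dm_db_partition_stats, which needs VIEW DATABASE STATE permission; the TOP (20) cut-off is an arbitrary choice.

```sql
-- Largest user tables in the current database by reserved space (8KB pages converted to KB).
SELECT TOP (20)
       s.name AS schema_name,
       t.name AS table_name,
       SUM(ps.reserved_page_count) * 8 AS reserved_kb,
       -- Count rows from the heap or clustered index only, so rows are not counted once per index.
       SUM(CASE WHEN ps.index_id IN (0, 1) THEN ps.row_count ELSE 0 END) AS row_count
FROM sys.dm_db_partition_stats AS ps
JOIN sys.tables  AS t ON t.object_id = ps.object_id
JOIN sys.schemas AS s ON s.schema_id = t.schema_id
GROUP BY s.name, t.name
ORDER BY reserved_kb DESC;
```

The tables at the top of this list are the ones worth running through the savings estimate first.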

The article below has some good scripts to see what will work and what will not…

https://thomaslarock.com/2018/01/when-to-use-row-or-page-compression-in-sql-server/

Upside

Much smaller data means less I/O and more data held in cache, and more data in memory makes for more efficient queries.

Summary

I am going to gradually add tables to compression and see what happens to CPU usage. The benefits should be substantial in terms of reads so it seems well worth pursuing.
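Adding a single table might look like the sketch below, using IV00101 as the example. A rebuild is an offline operation by default, so I would assume it needs to run in a quiet period; the choice of PAGE rather than ROW is also just for illustration.

```sql
-- Compress the table itself (the heap or clustered index) with PAGE compression.
ALTER TABLE dbo.IV00101 REBUILD WITH (DATA_COMPRESSION = PAGE);

-- Nonclustered indexes keep their own setting, so check what each index uses now.
SELECT i.name AS index_name,
       i.type_desc,
       p.partition_number,
       p.data_compression_desc
FROM sys.partitions AS p
JOIN sys.indexes    AS i
  ON i.object_id = p.object_id
 AND i.index_id  = p.index_id
WHERE p.object_id = OBJECT_ID('dbo.IV00101')
ORDER BY i.index_id;

-- To compress every index on the table in one statement instead:
-- ALTER INDEX ALL ON dbo.IV00101 REBUILD WITH (DATA_COMPRESSION = PAGE);
```

Doing one table at a time like this makes it easy to watch CPU usage after each change and to go back with DATA_COMPRESSION = NONE if a table turns out to be a poor fit.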

Support

This article would indicate it is supported for Dynamics GP. The tool it references for choosing tables to compress is no longer available, but it is possible to work with the database manually to turn on compression.

https://blogs.msdn.microsoft.com/nav/2011/07/21/sql-server-data-compression-and-microsoft-dynamics/